Shantanu S. Sinha, R.K. Satzoda, S. Suchitra, T. Srikanthan, "Additive Hough Transform on Embedded Computing Platforms", at the 56th IEEE International Midwest Symposium on Circuits and Systems (MWSCAS). Columbus, Ohio, USA. 2013. (pdf)

Driver assistance systems such as lane departure warning, road sign reading, pedestrian detection etc. are becoming virtually ubiquitous in the high-end luxury sedan market. Most of these systems rely heavily on image/signal processing routines and contain hardware quite different from what these routines were originally designed for. In particular, we consider the case of the Hough Transform which, by default, supports only angle-level parallelism. Hough Transform computation therefore requires a large number of cycles per image, which limits its usability in real-time capable applications.

The Hough Transform is one of the most commonly used feature extraction techniques. It works with any form of parametric shape and is insensitive to shifts in orientation or mild occlusion. This makes it an essential component of almost every image processing application. In automobiles, in particular, the linear Hough Transform finds wide use in lane detection systems. Lane detection systems form a core component of driver assistance systems as well as autonomous vehicles. In spite of being such a core component of image processing, the Hough Transform remains computationally demanding, requiring evaluation of transcendental functions and involves a large per-image latency. This greatly limits its use in real-time capable systems. A fast variant of the Hough Transform could be coupled with learning-based techniques or syntactic pattern recognition to realize an entire range of real-time applications.

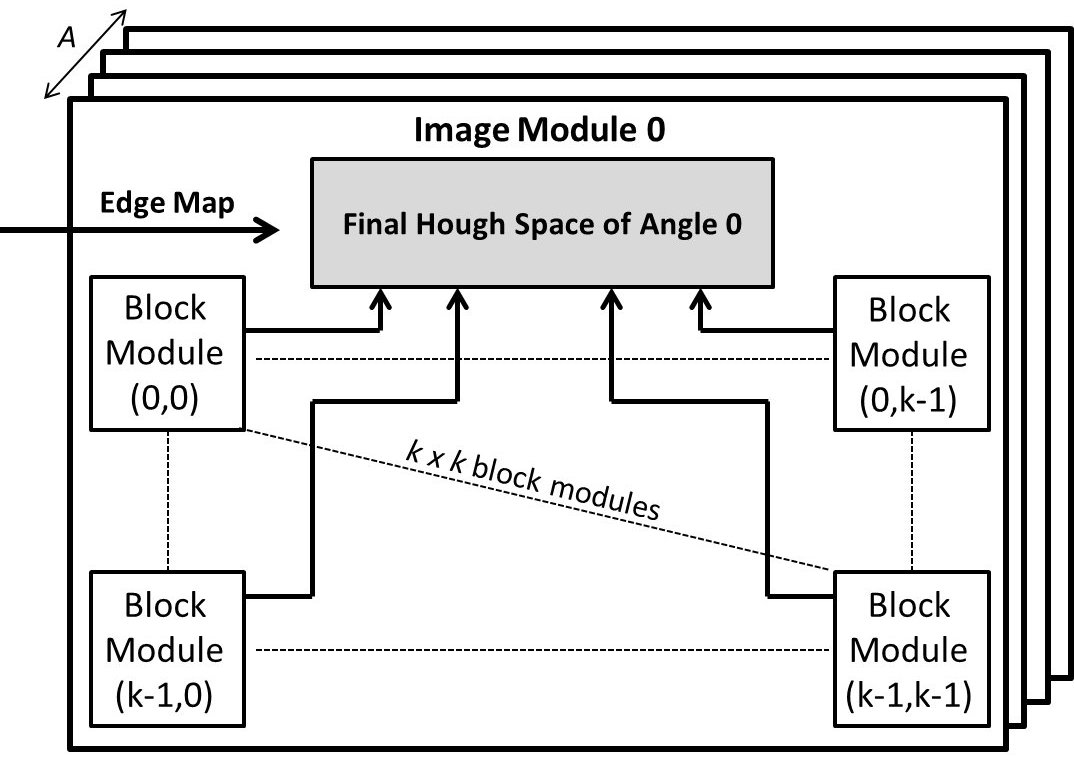

Most existing Hough Transform variants support only angle-level parallelism. For an image of size $n$x$n$, this would involve $O(n^2)$ computations. If we divide this image into a grid of $k\times k$ blocks, each of size $m\times m$ (where $m = n/k$) and find some way to process all these blocks in parallel, we can compute the Hough Transform of the image in just $O(m^2)$ cycles. In practice, depending on the size of $k$, this could lead to a reduction in processing time by nearly two orders of magnitude. This is the central idea behind the Additive Hough Transform (AHT). We verified this theory by implementing AHT on a wide range of computing platforms (multicore CPUs, GPUs, FPGAs) and demonstrated its effectiveness.

Our implementation of AHT generates exactly the same Hough space as the regular Hough Transform, while executing a full order of magnitude faster on a multicore CPU. In practice, we realize faster computation in both serial and parallel environments, with a serial AHT implementation performing 140% faster than the comparable OpenCV implementation of the Hough Transform. This figure increases to 160% in the case of a parallel AHT implementation, when compared to a row-wise parallel Hough Transform implementation in software. Additionally, with GPU acceleration, we succeeded in achieving over 240fps on a commodity nVIDIA GT630M notebook graphics card.

Since AHT was primarily designed for embedded systems, we also evaluated its performance on a Xilinx Virtex7 FPGA. In hardware applications, if the range of angles for which the HT is to be computed is known a priori, LUTs can be used and, in such cases, AHT implementation was found to be 35$\times$ faster than the comparable conventional HT implementation at the highest allowable clock speed.

In applications in which the range of angles for which HT is to be computed is known only at runtime, LUTs cannot be used. In such cases, CORDIC-based implementations could be used, with CORDIC-based AHT implementations being 21$\times$ faster than their regular HT counterparts.

The Hough Transform is a feature extraction technique used to detect instances of parametric curves through a voting procedure in parameter space. It transforms a pixel $P(x,y)$ in Cartesian space to a sinusoidal curve in the Hough Space indexed by $\rho$-$\theta$ through the following relation -

In this manner, for each line passing through $(x,y)$, by varying $\theta$ we arrive at a different value $\rho$, representing a different sinusoid in the Hough Space. If we do this for all the edge pixels in the image, collecting the $(\rho, \theta)$ coordinates in an accumulator matrix, we can easily determine which lines (each $(\rho, \theta)$ pair in the Hough Space corresponds to a line through $(x,y)$ in the Cartesian space) are prominent in the image through simple thresholding.

It can be seen that the Hough Transform is computationally quite demanding, requiring evaluation of transcendental functions. Additionally, we must compute $\rho$ for each $\theta$ in the specified range of angles for each edge pixel. Doing so, we would incur a large per-image latency, drastically reducing frame rates.

The Additive Hough Transform (AHT) is a block-based parallel variant of the regular Hough Transform. We divide the image into a grid comprising $k\times k$ blocks, each of size $\times $, where $m = n/k$, $n$ being the width (or height) of the image. The assumption of a square image is purely for simplicity of explanation, AHT being perfectly valid for rectangular images as well.

The HT of any edge pixel in the block can be calculated as the sum of the HT value of the edge pixel with respect to (w.r.t) the local origin of the block it belongs to and the HT value of this local origin w.r.t the global origin of the image. This property is called the additive property of HT represented as -

Most importantly, the Hough Space computed using AHT is mathematically equivalent to the Hough Space computed using the regular Hough Transform. We, hence, detect the exact same lines as the regular HT, albeit faster. There is no compromise on accuracy for added speed.

One of the primary drawbacks of AHT is the compulsory atomic access to the final Hough Space. Thus, even if the Hough Transform within the blocks are computed in parallel, these individual Hough Spaces must then be summed in serial, requiring a number of excess cycles. On observation it can be seen that it is necessary to compute the Hough Transform of a pixel only at the gradient angle at that pixel in the edge map (the O'Gorman Hough Transform). We can exploit this property to parallelize summation of the local Hough Spaces of each block. This work is currently in progress and can be viewed here.