This work was sponsored by Infineon R&D, Singapore

The automobile industry, today, makes massive use of computing hardware. Automatic traction control, active suspension systems, augmented night vision systems, lane departure warning systems - all require high-end microcontrollers for smooth operation. Today, high-end digital signal processing (DSP) are available with clock speeds in excess of 1GHz, fast-access two-level cache systems, DMA circuitry etc. One such device is the Infineon TriCore, which unites the elements of an RISC processor core, a microcontroller and a DSP board in a single package.

While DSP boards have undergone numerous improvements over the years, one key drawback remains - they are inherently serial processors. Just as most computer CPUs today come coupled with a dedicated GPU for massively parallel computations, manufacturers are looking to find co-processors that can be coupled with DSP boards to speed up inherently parallel computations. Field programming gate arrays (FPGAs) are reprogrammable semiconductor devices that are based around a matrix of configurable logic blocks (CLBs) surrounded by a periphery of I/O blocks. Since they are essentially huge fields of programmable gates, they can be programmed into multiple parallel hardware paths. This makes them an ideal choice as co-processors for parallel computations.

The TriCore is a dual issue machine in the sense that it is capable of executing two instructions in a single clock cycle, provided the first instruction is issued to the Integer Processing (IP) unit, followed by an instruction issued to the Load/Store unit. However, it is a "static" dual issue machine, i.e., it is capable of executing the two instructions in parallel only if they are scheduled in that manner at compile time. This is in contrast to dynamic dual issue machines, also known as superscalar CPUs or Hyper-Threading, wherein the instructions are scheduled for parallel execution dynamically at runtime. Thus, it is possible to achieve tremendous performance gains during runtime if routines are optimized to take advantage of the TriCore's architecture at compile time.

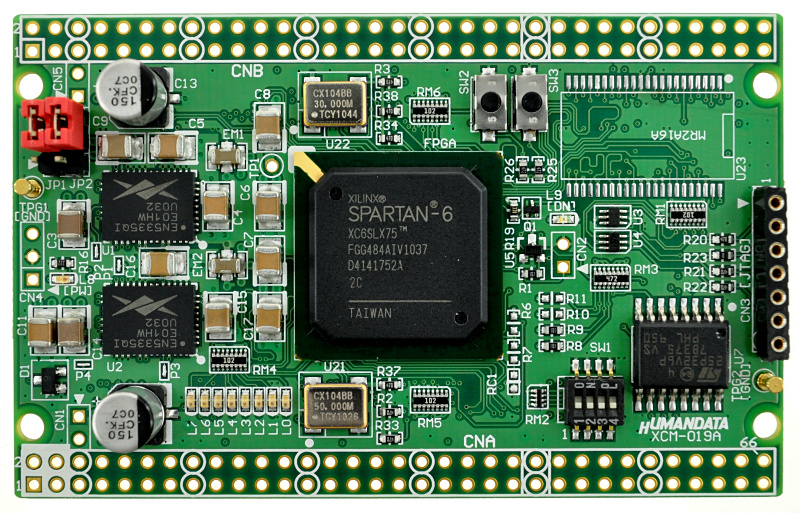

Many signal and image processing routines are almost "embarrassingly parallel". On computers, these routines are generally executed by powerful dedicated GPUs capable of executing at times even thousands of instructions in a single clock cycle. We, thus, expect huge performance gains if we couple the TriCore with specialized hardware for inherently parallel computations. To this effect, we coupled the TriCore with a low-power Xilinx Spartan 6 FPGA.

The entire set up is displayed on the right. The hardware platform comprises the TriCore, the Spartan 6 FPGA board and a low-voltage inverter board from Infineon’s FOC kit. Some extra elements exist for extended Kalman filter based sensorless motor control - something that I did not work on, though we used the same hardware.

Data transfer between the TriCore and the FPGA is facilitated via a 32-bit interface between the TC1797 TriCore processor and FPGA module through the external bus unit (EBU). The FPGA is memory-mapped to 1K locations in the chip select CS2 address space. The C code running on TriCore processor reads and writes 32-bit data to the contents of register memories in FPGA as demultiplexed EBU bus transactions.

We first profiled code for standard DSP routines using the Eclipse-based Tasking IDE. We then identified specific routines that could be rewritten so as to incorporate some common elements - like matrix multiplication or convolution. These routines were then interfaced with the FPGA board to analyze performance.

Routines that did not contain these "embarrassingly parallel" segments were optimized at the assembly level for execution on the TriCore. Finally, we reordered instructions so as to take advantage of the TriCore's dual issue nature.

We experimentally verified the effectiveness of the co-processor using execution time of the Hough Transform as a metric. Since the Hough Transform is not directly suited to parallel architecture, we used the Additive Hough Transform instead. The Additive Hough Transform is a block-based parallel implementation that produces the exact same Hough Space as the regular Hough Transform, and hence detects the same lines.