A small selection of recent projects I’ve either developed or been otherwise involved in.

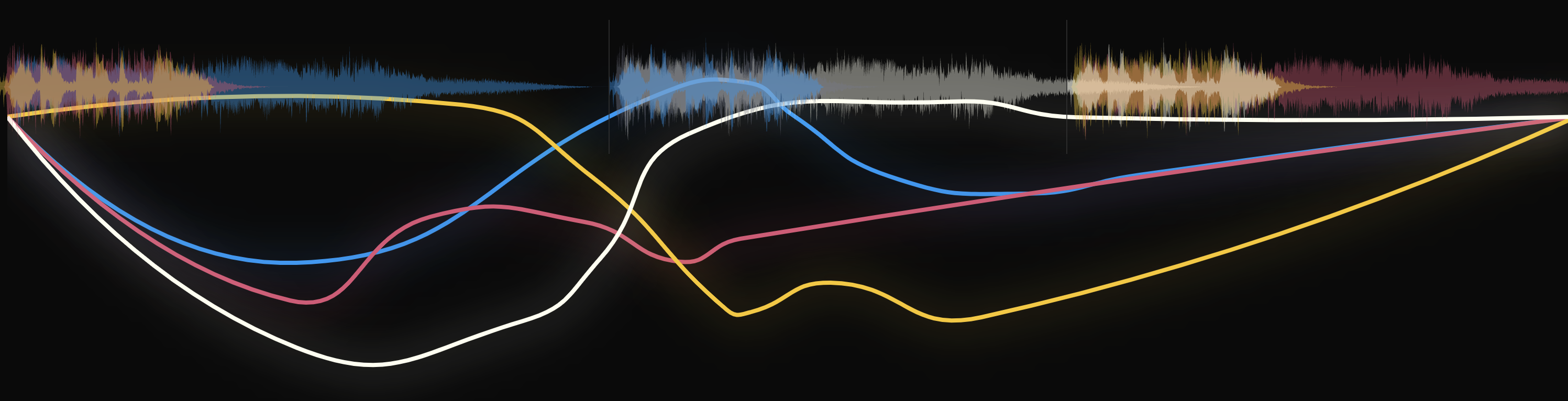

Sifting Sound (In Progress)

A new software ecosystem on the web for working creatively with large audio collections. More info to come; this is my master’s thesis project.

Tools used (so far): Python, Flask, Essentia, JavaScript, ReactJS, d3.js, Konva, Web Audio API, PostgreSQL (with Psycopg2), UMAP

My roles: Software Development, UX Design, Data Analysis, Algorithm Design, Database Administration

The Sifter (2019)

A system that lets a user interactively query a long audio file for “sound objects” similar to a target sound. The target can be provided separately or selected by the user from within the long file itself.
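The kind of query described above can be illustrated with a brute-force sketch. It assumes the long file has already been reduced to a one-dimensional feature sequence (a loudness curve, say), and it is not the project's own implementation; the function names `znorm`, `distance_profile`, and `best_matches` are hypothetical.

```python
import math

def znorm(window):
    """Scale a window to zero mean and unit variance (flat windows become all zeros)."""
    mean = sum(window) / len(window)
    var = sum((x - mean) ** 2 for x in window) / len(window)
    std = math.sqrt(var)
    if std == 0.0:
        return [0.0] * len(window)
    return [(x - mean) / std for x in window]

def distance_profile(series, target):
    """Euclidean distance from the target to every same-length window of the series."""
    m = len(target)
    t = znorm(target)
    profile = []
    for start in range(len(series) - m + 1):
        w = znorm(series[start:start + m])
        profile.append(math.sqrt(sum((a - b) ** 2 for a, b in zip(w, t))))
    return profile

def best_matches(series, target, k=3):
    """Return start indices of the k closest windows that do not overlap each other."""
    profile = distance_profile(series, target)
    m = len(target)
    order = sorted(range(len(profile)), key=lambda i: profile[i])
    chosen = []
    for i in order:
        if all(abs(i - j) >= m for j in chosen):
            chosen.append(i)
        if len(chosen) == k:
            break
    return chosen
```

Because each window is z-normalized before comparison, a quiet and a loud occurrence of the same shape both count as matches. The target can equally be a slice of `series` itself, which corresponds to selecting part of the long sound as the query.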

This is a small part of ongoing work.

Tools used: C++, openFrameworks, Khiva, ArrayFire, Essentia, Python (for prototyping)

My roles: Software Development, UX Design, Data Analysis

Conceptronica (2019)

with Charles Holbrow and Manaswi Mishra

An attempt at generating reviews and album art automatically from audio using neural networks. Uses MARD from MTG. Finetunes a language model and prompts it with words derived from audio features, and conditions a GAN on audio features, with very limited success (but virtually unlimited amusement). Done as a project in MAS S68 Fall 2019. Code and samples forthcoming, but for now a review snippet (doesn’t match the input much, but quite fun to read I think):

This cd does not just put you in the mix. It makes you think about life, take you on a wonderful ride, meditate on this, get you down in this and… you’ll do it! I love this cd, and thank you Amazon for having the amazing talent and variety on this cd.Just how great Moon Records are for making great albums and for being able to get them on CD is also one of the reasons why I liked this disc the most. The fact that this could be a cheaper import CD just makes it even more enticing. There are no liner notes or anything but just short bio on the band. A great addition to anyone’s Moon Records library.As for Moon Records, they are a veteran outfit led by the great voice of John Cage who is too busy doing remakes and re-recordings to mention so I’ll focus on their records. Luther Vandross had the Masterpuppets in 1970 and Led Zeppelin, Paul Butterfield was the real genius of the 60’s, “Byronic” was the marriage of Miles Davis and Bird with Chick Corea. Roy Orbison was their self-tourmaster of the 1970s. from the 70’s Paul Vandross was an unhappy man who went through the 90’s and Ray Charles to the advent of the 90’s Ray Barrett Crudupnick, Peas was the jealous master of the wheel. would pull together. together, Lennon broke up his Red Hotdue to his story with Bird was the 60’s with Mel Lewis & an original with George Jones and the late 60’s African Wind and Roy Eldridge had two stellar albums. had a great sides from Moby and Muddy Waters, Buggie from Golden soulful The Flies. Terence Crandell played a bit while Beck caught a back in his rock break thru Blues Kansas City with Slim. These are recorded in the mid 70’s Funk House era.

Tools used: Python, Keras, NLTK, Transformers, GPT2, Essentia

My roles: Software Development, Data Analysis

The AudioObservatory (2019)

A system that takes a large number of audio files, analyzes them for a number of feature trajectories, computes distance matrices based on these features (using DTW, for example), and then creates a weighted combination of these matrices to use in embedding the audio content in a 3D space. This combination can be updated to match user interest, resulting in a reorganization of the material. It also defines a number of interactions for exploring the space, including building probabilistic paths that guide navigation based on the user’s choices, and provides multimodal input methods (e.g. face-tracking, LeapMotion, MIDI controllers) for quick local and global access to sounds within the source collection.
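The distance-matrix stage can be sketched roughly as follows. This is a minimal pure-Python illustration, assuming each file has been reduced to one-dimensional feature trajectories; the function names and the choice to scale each matrix to a maximum of 1 before combining are assumptions, not details taken from the project.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping cost between two 1-D trajectories."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def distance_matrix(trajectories):
    """Pairwise DTW distances, scaled so the largest entry is 1."""
    n = len(trajectories)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d[i][j] = d[j][i] = dtw_distance(trajectories[i], trajectories[j])
    peak = max(max(row) for row in d) or 1.0  # avoid dividing by zero
    return [[v / peak for v in row] for row in d]

def combine(matrices, weights):
    """Weighted average of per-feature distance matrices."""
    total = sum(weights)
    n = len(matrices[0])
    return [[sum(w * m[i][j] for m, w in zip(matrices, weights)) / total
             for j in range(n)] for i in range(n)]
```

One matrix would be computed per feature (loudness, brightness, and so on), and changing the weights passed to `combine` is what would reorganize the material. The combined matrix could then be handed to any embedding method that accepts precomputed distances to obtain 3D coordinates.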

This is a small part of ongoing work.

Tools used: C++, openFrameworks, Eigen (v1), Tapkee (v1), Python (v2), Scikit-learn (v2), UMAP (v2)

My roles: Software Development, UX Design, Data Analysis

Compiler Poetry (2019)

Generating poetic compiler messages. Assembled C++ error and warning messages from here, and put them together with some excerpts of Romantic poetry. Done as part of a homework assignment in MAS S68 Fall 2019. The (very small) dataset is here.

Examples beginning with “The”:

- The water cannot have a near class from the parameters

- The land feature to the same type the walks

- They handler must past: Subblics

- The coonthing born is not an end of setter;

- The was worst though flowers handlefer clan

- The design daacking list address too large

Tools used: Python, textgenrnn

My roles: Data Aggregation and Analysis

A Pedagogy of Noise (2019)

A detailed approach to “playing with sound” in educational environments. It includes a node-based graphical software environment that lets young learners explore sound on the internet, designs for learning environments geared specifically towards sound (to maximize learner agency and group activity, and to preserve audio quality), and creative prompts to drive sonic exploration. The software, space, and activities are described as interrelated. A short excerpt of the software platform is shown here.

My roles: Learning and Space Design, Software Development

SensorTonnetz (2019)

A physical, sensor-driven version of the musical Tonnetz, which is often used as an analytic tool in neo-Riemannian theory.

My roles: Hardware Design, Circuit Design, Software Development

Scream (2018)

Scream (2018) is an audiovisual installation that uses face-tracking as an input to control an instrument built from the audio portion of The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS) by Livingstone & Russo (licensed under CC BY-NC-SA 4.0). Facial features and gestures are used to construct textures from the database using Corpus-Based Concatenative Synthesis. This work is part of an ongoing series exploring artistic applications of audio datasets, bodily interfaces to recorded sound, and new visual and physical interfaces to the exploration of sound corpora.

Scream uses perhaps the most primal sound-making device, the mouth, in a silent, visual capacity. It creates a one-to-many mapping in terms of people, allowing the gestures of one person to be expressed through the voices of many. The installation’s title doubles as an instruction, encouraging participants to scream, for whatever reason they may want to, through the voices of others without needing to make any sound acoustically.
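The unit-selection step at the heart of corpus-based concatenative synthesis can be sketched as a nearest-neighbour lookup. Everything specific here is an assumption made for illustration: the unit ids, the two descriptors, and the mapping from face-tracking values to descriptor targets are hypothetical and not taken from the installation.

```python
import math

def nearest_units(corpus, target, k=1):
    """Return the ids of the k corpus units whose descriptors are closest to the target."""
    def dist(unit):
        return math.sqrt(sum((unit["features"][name] - value) ** 2
                             for name, value in target.items()))
    ranked = sorted(corpus, key=dist)
    return [unit["id"] for unit in ranked[:k]]

# Hypothetical corpus: short speech segments described by normalized loudness and pitch.
corpus = [
    {"id": "calm_01", "features": {"loudness": 0.2, "pitch": 0.3}},
    {"id": "angry_07", "features": {"loudness": 0.9, "pitch": 0.6}},
    {"id": "fear_03", "features": {"loudness": 0.7, "pitch": 0.9}},
]

def face_to_target(mouth_openness, brow_raise):
    """Assumed mapping from two face-tracking values in [0, 1] to descriptor targets."""
    return {"loudness": mouth_openness, "pitch": brow_raise}
```

Calling `nearest_units(corpus, face_to_target(1.0, 0.5), k=2)` picks the two units closest to a wide-open mouth with moderately raised brows. Playing several selected units at once is one way a single gesture could be voiced by many speakers.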

My roles: Installation Design, Software Development, Music Composition

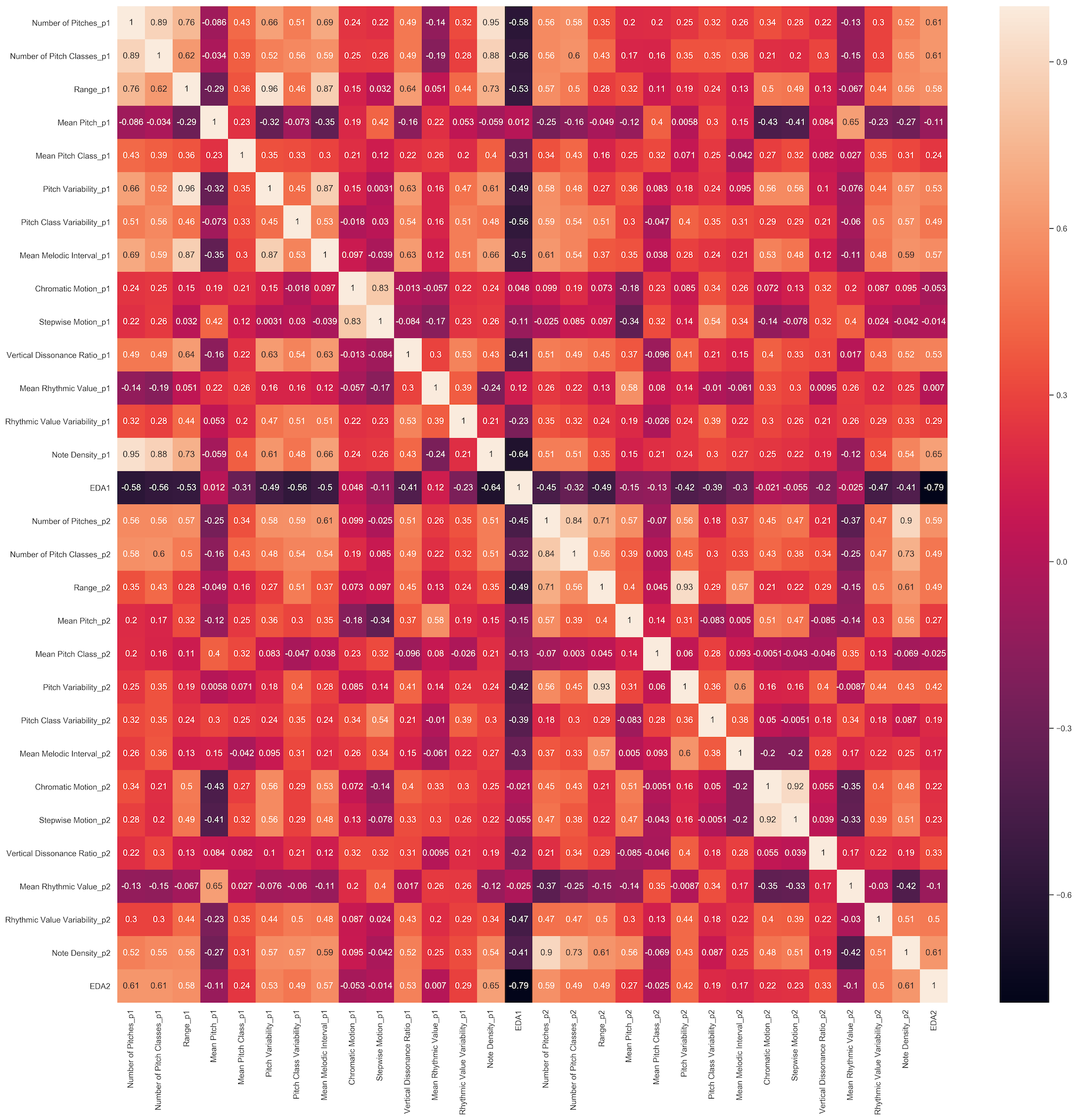

Affective-Musical Synchrony in Jazz Improvisation Contexts (2018+)

This study looks for relationships in physiological data obtained from Jazz musicians improvising together and in analyses of their musical performances, then examines patterns in the resulting data to help understand some of the mechanisms at play in collective improvisation. Electrodermal activity (EDA) data was recorded from pairs of Jazz musicians performing a well-known Jazz standard together, along with the performances themselves. Features were extracted from these performances and correlated against the EDA data and against each other.

A number of inquiries into the process of music-making have focused on individual models of creativity and expression. Perhaps this comes from the image of the lone genius, working to create in isolation, whose work is nonetheless observed socially. This may be especially true in the world of classical music, wherein the composer works at their own pace to produce novel music, and the performers work in real-time to interpretively disseminate a creative product. This paradigm identifies two distinctions between composition and performance: one, that composition (in which the creative product is synthesized) is usually a solitary task whereas performance may be social and require collaborative activity, and two, that composition is often done “offline” in the sense that it may take a considerable amount of time per unit of musical time generated, whereas interpretation is a real-time task. Nilsson (2011) refers to this chronological distinction as being between “design time” and “play time”, with the former concerning “conception, representation, and articulation of ideas and knowledge outside of chronological time” (Nilsson 2011), and the latter dealing with “bodily activity, interaction, and embodied knowledge” (Nilsson 2011). In Jazz contexts, designed structures and patterns are the foundation for real-time decision-making in which the separation of composition and interpretation is less clear. This real-time decision-making, or “spontaneous creativity”, may, as McPherson and Limb (2013) note, make Jazz particularly fertile ground for scientific approaches to the study of creativity. As such, the creative process in Jazz improvisation contexts can be seen perhaps as interpolating both chronologically and socially between the categories of composition and interpretation, and when people improvise together with shared vocabulary rooted in the tradition, this creative process may be seen better as a shared co-experience. For these reasons, collaborative improvisation in the Jazz tradition has been chosen as a foundation upon which to use methods from the field of affective computing to explore synchronous creativity.

N.B. This is an ongoing study, started as a final project in MAS.630 Affective Computing at the Media Lab.

Nilsson, Per Anders. “A Field of Possibilities: Designing and Playing Digital Musical Instruments.” Diss. Gothenburg: University of Gothenburg, 2011.

McPherson, Malinda, and Charles J. Limb. “Difficulties in the Neuroscience of Creativity: Jazz Improvisation and the Scientific Method.” Annals of the New York Academy of Sciences, vol. 1303, no. 1, 2013, pp. 80–83, doi:10.1111/nyas.12174.

My roles: Study Design, Data Analysis

NYC Sounds (2018)

A visual interface to explore sounds aggregated from the internet that have been geotagged. Uses granular synthesis and maps street length to grain length.
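The street-length-to-grain-length mapping can be sketched as a clamped linear map followed by a windowed cut. The ranges (50 m to 5 km, 20 ms to 500 ms), the linear shape of the mapping, and the Hann window are all illustrative assumptions rather than the values used in the project.

```python
import math

def grain_length_ms(street_m, min_street=50.0, max_street=5000.0,
                    min_grain=20.0, max_grain=500.0):
    """Linearly map a street length in metres to a grain length in ms, clamped to the range."""
    clamped = max(min_street, min(max_street, street_m))
    position = (clamped - min_street) / (max_street - min_street)
    return min_grain + position * (max_grain - min_grain)

def extract_grain(samples, start, length_ms, sample_rate=44100):
    """Cut a Hann-windowed grain of the requested length out of a list of samples."""
    n = min(int(sample_rate * length_ms / 1000.0), len(samples) - start)
    if n < 2:
        # Too short to window; return whatever samples remain.
        return list(samples[start:start + max(n, 0)])
    return [samples[start + i] * 0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1)))
            for i in range(n)]
```

Under these assumptions a short alley yields brief, clicky grains while a long avenue yields longer, more recognizable fragments of the geotagged recording.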

My roles: Software Development, UX Design

Schoenberg in Hollywood (Tod Machover Opera) (2018)

Schoenberg in Hollywood is an opera by Tod Machover that premiered in November 2018, with four performances by the Boston Lyric Opera.

Press: The Boston Globe, WBUR

My roles: Audio Engineering

ObjectSounds (2018)

ObjectSounds uses object detection as an interface to sound exploration. It uses detected object names to search Freesound.org and retrieve arbitrarily selected but related sounds recorded by other users. It is intended to offer an expression of the latent sonic potential in a scene.

(Demo video coming soon).

My roles: System Design, Software Development

FreesoundKit (2018)

FreesoundKit is a Swift client API for Freesound (in progress).

My roles: API Design, Software Development

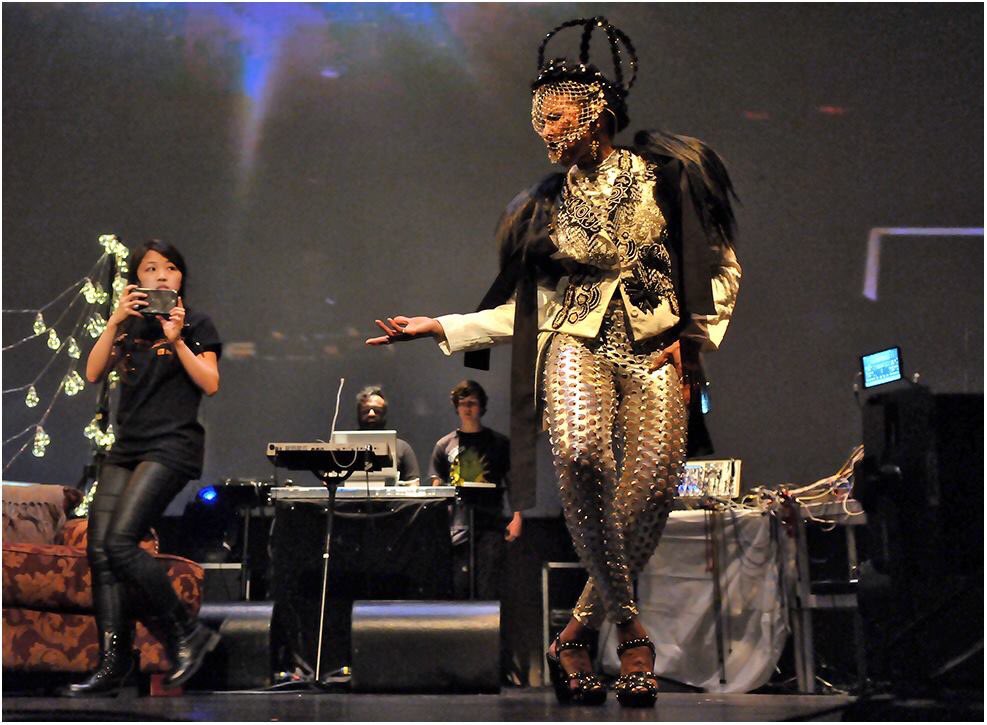

A Rose Out of Concrete (Boston Conservatory Ballet) (2018)

A Rose Out of Concrete is an “Afrofuturistic multidisciplinary” dance project by Nona Hendryx, Hank Shocklee, Duane Lee Holland Jr., and Dr. Richard Boulanger as part of the Boston Conservatory at Berklee’s Limitless show (April 2018).

Press: The Bay State Banner

My roles: Software Development, Show Control

MusicianKit (2017)

MusicianKit provides a simple API for musical composition, investigation, and more. It doesn’t intend to produce audio, but to provide tools for constructing and working with musical ideas and sequences, and for gathering information about how musical iOS apps are being used through a kind of distributed corpus analysis, where the corpus is a living collection of music being made by iOS musickers.

It is currently in a pre-alpha state.

My roles: API Design, Software Development

csPerformer (2017)

csPerformer was my undergraduate thesis project in the Electronic Production and Design department at Berklee. Essentially, it’s a prototype for a system that is intended to facilitate composition through performance, or composition in a performative manner. It does this by allowing you to sing, play, or otherwise make pitches and sounds into the system to give yourself materials to compose with in real-time. In addition to acting as a compositional interface for performers, it is intended to bring physicality to compositional actions, or to allow compositional decisions to be expressed physically.

My roles: System Design, Software Development, Music Composition

Soundscaper (2017)

Soundscaper is an approach to placing and distributing music and sound in physical space in a way that can be interacted with, using augmented reality.

It presents a model, inspired by interactions more common to the visual arts, for musical and multimodal composition, as well as for user interactions with music, sound, and space that extend contemporary practices in these media. It is spatialization not in the sense that the origins of sound are distributed in space, but in the sense that the experience of them is.

My roles: System Design, Software Development

Twitter Ensemble (2017)

The Twitter Ensemble is a generative musical system that interprets tweets related to music in real-time.

My roles: Installation Design, Software Development, Music Composition

The Sound of Dreaming (2017)

The Sound of Dreaming is a musical-technological-theatrical work written by and starring Nona Hendryx and Dr. Richard Boulanger. In it, a researcher seeks to steal the voice of a celebrated singer and infuse his machines with it, in order to become invincible, and a variety of ideas are explored along the way. It has been presented twice: once at Moogfest in Durham, NC on May 19, 2017, and three months later at Mass MoCA’s Hunter Center as part of Nick Cave’s Until in North Adams, MA on August 19, 2017.

My roles: Software Development, Music Production, Guitar/Laptop Performance, Show Control

Berklee Music Video: Kidzapalooza (2017)

As part of an interactive audiovisual experience designed for children, to be presented at Kidzapalooza (at Lollapalooza Chicago) 2017, I designed and built a generative musical system that allowed children to play convincingly in a few popular styles of music using a number of hardware interfaces.

The visual and lighting system, and the concept, are by Blake Adelman.

My roles: Musical System Design, Software Development, Music Production

Songs to Joannes VII (from Speech Studies) (2017)

This movement is a setting of the seventh poem in Songs to Joannes (sometimes also Love Songs), by the British poet, feminist, futurist, and writer Mina Loy. Although VII is sometimes overlooked in the series, it contains a brief but subtly intimate description of a lover, and maintains a close symbolic connection with the poetry that surrounds it. My setting is an interpretation, semantic, semiotic, syntactic, structural, and sonic, of the world of the poem, and a sensual expression of synthetic sonic materials and their interaction with organic sonic materials.

My roles: Music Composition

Passacaglia for 30 Lazy Guitars (2016)

Passacaglia for 30 Lazy Guitars explores the cultural image of the electric guitar, its potentials as a device for sound-making and noise-making, its physicality as a material to be played with, and its sonic presence considered aside from its constructed “aesthetic of virtuosity”. All constituent sounds come from a prepared electric guitar.

My roles: Music Composition, Visual Design