ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2008), Vol. 27, No. 5, Article 134 (9 pages), 2008.

CG Paper Grand Prix presented by The Society for Art and Science, Japan.


Figure panels: lens with RGB filters; captured image; extracted depth map; extracted alpha matte.

From a single photograph captured through a color-filtered aperture, its depth map and alpha matte can be extracted automatically.


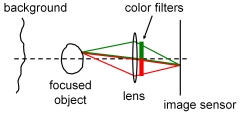

For a scene point at the focused depth, light rays in the red band and those in the green band (the blue band is omitted in this 2D illustration) converge to the same point on the image sensor.


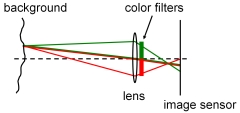

For a scene point away from the focused depth, light rays in the two bands reach different positions on the image sensor, producing a color shift. This shift arises from geometric optics, not from chromatic aberration.


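As a result, a scene point farther than the focused depth is observed with a color shift in one direction, and a scene point nearer than the focused depth with a shift in the opposite direction.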

We exploit these depth-dependent color misalignment cues for depth and matte extraction.

Demonstration of our color-filtered aperture photography and its applications, including:

- Reconstruction of color-aligned images

- Interactive novel view synthesis

- Interactive post-exposure refocusing

- Composition over different backgrounds

- Video matting

50-second presentation for the fast-forward session.

Results for photos taken at the conference venue.

For those interested in exploring or reproducing coded or color-filtered aperture techniques.

C source code for the color-filtered-aperture-based depth estimation.