Coded Time of Flight Cameras: Sparse Deconvolution to Address Multipath Interference and Recover Time Profiles

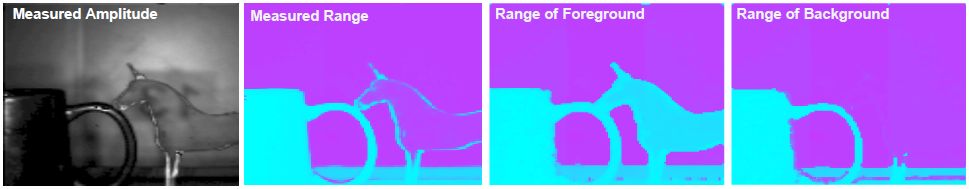

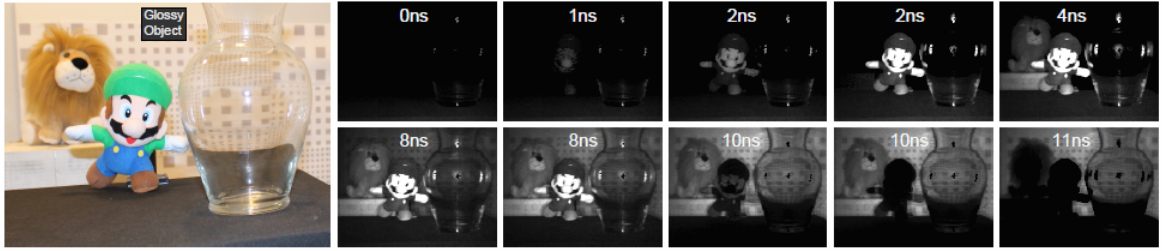

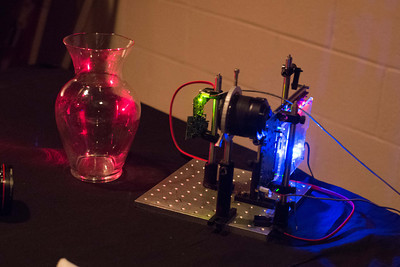

Figure 1: Using our custom time of flight camera, we are able to visualize light sweeping over the scene. In this scene, multipath effects can be seen in the glass vase. In the early time slots, bright spots are formed from the specularities on the glass. Light then sweeps over the other objects in the scene and finally hits the back wall, where it can also be seen through the glass vase (8ns). Light leaves first from the specularities (8-10ns), then from the stuffed animals. The time slots correspond to the true geometry of the scene (light travels about 1 foot in a nanosecond; times are for the round trip). Please see http://media.mit.edu/~achoo/nanophotography for animated light sweep movies.
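The round-trip times in the caption can be converted to approximate one-way distances. The snippet below is a minimal sketch, not taken from the paper: the function name `round_trip_ns_to_depth_m` is hypothetical, and it assumes the light source and the sensor sit at the same position.

```python
C_M_PER_NS = 0.299792458  # speed of light in metres per nanosecond (about 1 foot/ns)


def round_trip_ns_to_depth_m(t_ns: float) -> float:
    """One-way distance in metres for a round-trip travel time in nanoseconds."""
    # Halve the path because the light travels to the object and back.
    return C_M_PER_NS * t_ns / 2.0


for t_ns in (2.0, 8.0, 10.0):
    depth = round_trip_ns_to_depth_m(t_ns)
    print(f"{t_ns:4.1f} ns round trip -> {depth:.2f} m from the camera")
```

Under these assumptions, the 8ns time slot in which the back wall lights up corresponds to a wall a little over one metre from the camera.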

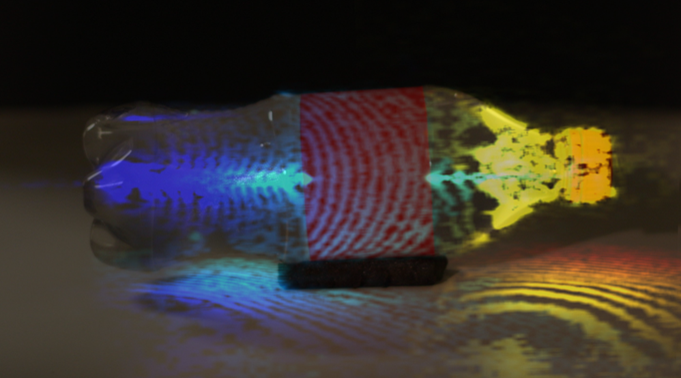

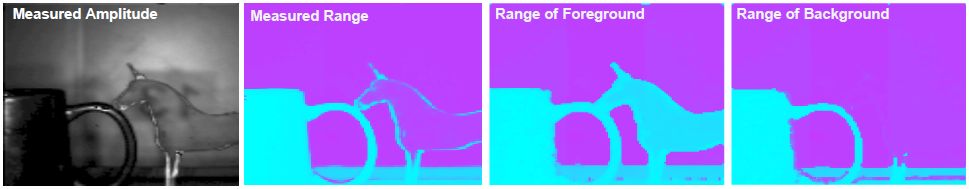

Figure 2: Recovering depth of transparent objects is a hard problem in general and has yet to be solved for Time of Flight cameras. A glass unicorn is placed in a scene with a wall behind (left). A regular time of flight camera fails to resolve the correct depth of the unicorn (center-left). By using our multipath algorithm, we are able to obtain the depth of foreground (center-right) or of background (right).


Light Sweep Movies

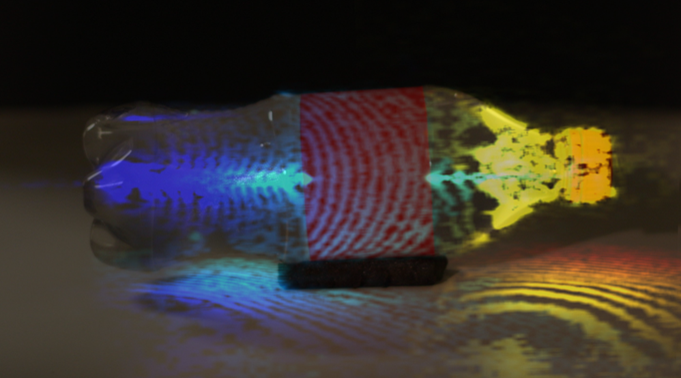

Observe light sweeping over the glass vase first, then over the Mario doll, Lion and wall in sequence. Note that the specularities on the glass vase disappear first, and note the multipath interactions between the wall and the glass vase.

Additional Movies for Direct Download: [URL]

Paper

Coded Time of Flight Cameras: Sparse Deconvolution to Address Multipath Interference and Recover Time Profiles, SIGGRAPH Asia 2013. [PDF]


Abstract

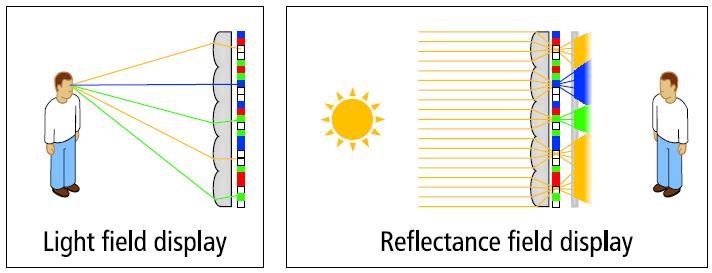

Time of flight cameras produce real-time range maps at a relatively low cost using continuous wave amplitude modulation and demodulation. However, they are geared to measure range (or phase) for a single reflected bounce of light and suffer from systematic errors due to multipath interference.

We re-purpose the conventional time of flight device for a new goal: to recover per-pixel sparse time profiles expressed as a sequence of impulses. With this modification, we show that we can not only address multipath interference but also enable new applications such as recovering depth of near-transparent surfaces, looking through diffusers and creating time-profile movies of sweeping light.

Our key idea is to formulate the forward amplitude modulated light propagation as a convolution with custom codes, record samples by introducing a simple sequence of electronic time delays, and perform sparse deconvolution to recover sequences of Diracs that correspond to multipath returns. Applications to computer vision include ranging of near-transparent objects and subsurface imaging through diffusers. Our low cost prototype may lead to new insights regarding forward and inverse problems in light transport.
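The pipeline in the last paragraph (convolution with a code, sampling at a sequence of delays, sparse deconvolution) can be illustrated with a toy example. This is a sketch under stated assumptions, not the paper's implementation: the length-31 maximum-length sequence, the two-path scene, and all function names are hypothetical, the model is noise-free and circular, and plain matching pursuit stands in for whatever sparse solver the paper uses.

```python
def m_sequence_31():
    """Length-31 maximum-length sequence with values +1/-1 (assumed example code)."""
    bits = [1, 0, 0, 0, 0]
    for n in range(5, 31):
        bits.append(bits[n - 5] ^ bits[n - 3])  # 5-bit linear feedback shift register
    return [1 if b else -1 for b in bits]


def circular_correlate(received, reference):
    """Lock-in style samples: correlate against the reference at each time delay."""
    n = len(reference)
    return [sum(received[t] * reference[(t - d) % n] for t in range(n))
            for d in range(n)]


def matching_pursuit(measurement, kernel, num_paths):
    """Greedily explain the measurement as a few shifted, scaled copies of kernel."""
    n = len(kernel)
    energy = sum(v * v for v in kernel)
    residual = list(measurement)
    spikes = {}
    for _ in range(num_paths):
        scores = [sum(residual[t] * kernel[(t - d) % n] for t in range(n))
                  for d in range(n)]
        best = max(range(n), key=lambda d: abs(scores[d]))
        amp = scores[best] / energy
        spikes[best] = spikes.get(best, 0.0) + amp
        residual = [residual[t] - amp * kernel[(t - best) % n] for t in range(n)]
    return spikes


code = m_sequence_31()
kernel = circular_correlate(code, code)  # code autocorrelation acts as the blur kernel

# Hypothetical scene: weak return at delay 4 (glass), strong return at delay 11 (wall).
true_paths = {4: 0.5, 11: 1.0}
n = len(code)
received = [sum(a * code[(t - d) % n] for d, a in true_paths.items())
            for t in range(n)]
measurement = circular_correlate(received, code)

recovered = matching_pursuit(measurement, kernel, num_paths=2)
for delay in sorted(recovered):
    print(f"delay {delay:2d}: amplitude {recovered[delay]:.3f}")
```

In this toy setting the two delays are recovered exactly and the amplitudes approximately, because plain matching pursuit does not re-fit earlier spikes after adding a new one.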

Files

Citation

@article{kadambi2013coded,
  author = {A. Kadambi and R. Whyte and A. Bhandari and L. Streeter and C. Barsi and A. Dorrington and R. Raskar},
  title = {{Coded time of flight cameras: sparse deconvolution to address multipath interference and recover time profiles}},
  journal = {ACM Transactions on Graphics (TOG)},
  volume = {32},
  number = {6},
  year = {2013},
  publisher = {ACM},
  pages = {167},
}

Kadambi, A., Whyte, R., Bhandari, A., Streeter, L., Barsi, C., Dorrington, A., & Raskar, R. (2013). Coded time of flight cameras: sparse deconvolution to address multipath interference and recover time profiles. ACM Transactions on Graphics (TOG), 32(6), 167.

Related Work

- Femtophotography: Velten, Andreas, et al. "Femto-photography: Capturing and visualizing the propagation of light." ACM Trans. Graph 32 (2013).

- Low Budget Transient Imaging: Heide, Felix, et al. "Low-budget Transient Imaging using Photonic Mixer Devices." Technical Paper to appear at SIGGRAPH 2013 (2013).

- Multifrequency Range Imaging: Payne, Andrew D., et al. "Multiple frequency range imaging to remove measurement ambiguity." (2009).

- Looking Around the Corner: Velten, Andreas, et al. "Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging." Nature Communications 3 (2012): 745.

- Early Beginnings of Time Resolved: R Raskar and J Davis, "5d time-light transport matrix: What can we reason about scene properties", Mar 2008

Reproducible Experiments

Newer components are available to build an improved version of the prototype detailed in the paper. Such a build is also cheaper due to the reduced cost of components in today's market.

Please contact us if you would like to build the nanophotography setup.

Contact

For technical details contact:

Achuta Kadambi:

achoo@mit.edu

Acknowledgments

We thank the reviewers for valuable feedback and the following people for key insights: Micha Feigin, Dan Raviv, Daniel Tokunaga, John Werner, Belen Masia and Diego Gutierrez. We thank the Camera Culture group at MIT for their support. Ramesh Raskar was supported by an Alfred P. Sloan Research Fellowship and a DARPA Young Faculty Award. Achuta Kadambi was supported by a Draper Laboratory Fellowship.

Frequently Asked Questions (FAQ)

What is nanophotography?

Nanophotography is a new technique that exploits coded, continuous wave illumination, where the illumination is strobed at nanosecond periods. The innovation is grounded in a mathematical technique called "sparse coding" that applies to optical paths. The end result: where a conventional camera measures only a single depth at a pixel, the nanocamera is a multidepth or multirange camera. One application is light sweep photography, which offers a visualization of light propagation. Another is the ability to obtain 3D models of translucent objects.

What's a simple analogy to describe Nanophotography?

Many 3D systems are straightforward. Laser scanners, for instance, ping the scene with a pulse of light and measure the time it takes for the pulse to come back (this is also how LIDAR police scanners work). In Nanophotography, we embed a special code into the ping, so when the light comes back we obtain additional information beyond time of arrival.

How does it compare with the state of the art?

Nanophotography is similar to the new Kinect. However, instead of probing the scene with a sinusoidal waveform, we probe the scene with a carefully chosen binary sequence that allows us to invert the optical paths. Recovering the optical paths allows us to visualize light, in addition to other applications. In the academic sphere, our group at MIT previously presented femtophotography, which used impulse based imaging (a femtosecond pulsed laser) coupled with extremely fast optics to recover the optical paths. A promising, low-cost technique has recently come from a University of British Columbia team. They leverage continuous wave imaging, but instead of using coded signals, they take measurements at multiple frequencies and evaluate their technique qualitatively. In this paper, we detail the advantages of coded signals, which allow a single measurement at a single frequency. In addition, we provide quantitative comparisons of nanophotography's time resolution to existing literature, including femtophotography.
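One way to see why a binary sequence can be easier to invert than a sinusoid is to compare autocorrelations: the sharper the peak, the easier it is to tell neighbouring time delays apart. The sketch below is illustrative only. It assumes a length-31 maximum-length sequence as the binary code (this page does not say which code the prototype uses), and the helper names are hypothetical.

```python
import math


def m_sequence_31():
    """Length-31 maximum-length sequence with values +1/-1 (assumed example code)."""
    bits = [1, 0, 0, 0, 0]
    for n in range(5, 31):
        bits.append(bits[n - 5] ^ bits[n - 3])  # 5-bit linear feedback shift register
    return [1 if b else -1 for b in bits]


def circular_autocorrelation(signal):
    """Correlation of a periodic signal with every circular shift of itself."""
    n = len(signal)
    return [sum(signal[t] * signal[(t - k) % n] for t in range(n))
            for k in range(n)]


def sidelobe_ratio(signal):
    """Largest off-peak autocorrelation magnitude relative to the zero-lag peak."""
    r = circular_autocorrelation(signal)
    return max(abs(v) for v in r[1:]) / r[0]


n = 31
binary_code = m_sequence_31()
sinusoid = [math.cos(2.0 * math.pi * t / n) for t in range(n)]  # one period

code_ratio = sidelobe_ratio(binary_code)
sine_ratio = sidelobe_ratio(sinusoid)
print(f"binary code sidelobe ratio: {code_ratio:.3f}")
print(f"sinusoid sidelobe ratio:    {sine_ratio:.3f}")
```

A ratio near 1 means that a shift by one sample barely changes the measurement, which is what makes separating two nearby returns ill-conditioned for a single sinusoid; a ratio near 0 means that each delay leaves a distinct signature.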

How does Continuous Wave (CW) range imaging compare with Impulse based?

In impulse based imaging, a packet of photons is fired at the scene and synchronized with a timer, so the time of flight is literally measured. In CW range imaging, a periodic signal is strobed within the exposure time and subsequent computation allows us to extract the time of flight. CW offers some advantages: notably, it does not require ultrafast hardware, and it enjoys increased SNR because the signal is constantly transmitting within the exposure. The drawbacks of CW include increased computation and multipath interference. We address the latter in the technical paper.
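The "subsequent computation" in conventional sinusoidal CW imaging is commonly a phase estimate from four correlation samples. The sketch below shows that standard four-sample scheme for a single return. It is a textbook illustration rather than the method of this paper, and the 30 MHz modulation frequency, the sample model, and the function names are assumptions made for the example.

```python
import math

C = 299_792_458.0  # speed of light in metres per second


def correlation_sample(depth_m, mod_freq_hz, phase_step, amplitude=1.0, offset=0.5):
    """Ideal lock-in sample for one return, with the reference shifted by phase_step."""
    phase = 4.0 * math.pi * mod_freq_hz * depth_m / C  # round-trip phase delay
    return offset + amplitude * math.cos(phase + phase_step)


def depth_from_four_samples(c0, c1, c2, c3, mod_freq_hz):
    """Depth from samples taken at reference shifts of 0, 90, 180 and 270 degrees."""
    # The constant offset cancels in both differences.
    phase = math.atan2(c3 - c1, c0 - c2) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * mod_freq_hz)


MOD_FREQ_HZ = 30e6   # assumed modulation frequency
TRUE_DEPTH_M = 2.0   # assumed single-bounce target

samples = [correlation_sample(TRUE_DEPTH_M, MOD_FREQ_HZ, k * math.pi / 2.0)
           for k in range(4)]
estimated_depth = depth_from_four_samples(*samples, mod_freq_hz=MOD_FREQ_HZ)
print(f"estimated depth: {estimated_depth:.3f} m")
```

The estimate is only unambiguous up to half a modulation wavelength (about 5 m at 30 MHz), and it assumes exactly one return per pixel, which is the assumption that multipath interference breaks.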

What is new about nanophotography?

Nanophotography is, at its heart, a paper that addresses the mixed pixel problem in time of flight range imaging. A mixed pixel occurs when multiple optical paths of light smear at a given pixel. Think of a transparent sheet in front of a wall, which would lead to 2 measured optical paths. We propose a new solution to this problem that is a joint design between the algorithm and hardware. In particular, we customize the codes used in the time of flight camera to turn the sparse inverse problem from an ill-conditioned problem into a better-conditioned one.
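The transparent-sheet example can be made concrete with a small phasor calculation. In a conventional single-frequency camera each return contributes a complex phasor, and the pixel reports the phase of their sum. The numbers below (a sheet at 1.0 m, a wall at 2.5 m, 30 MHz modulation, relative amplitudes 0.5 and 1.0) and the function names are assumptions chosen for illustration.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in metres per second


def phasor(depth_m, amplitude, mod_freq_hz):
    """Complex measurement contributed by one return at the given depth."""
    phase = 4.0 * math.pi * mod_freq_hz * depth_m / C  # round-trip phase delay
    return amplitude * cmath.exp(1j * phase)


def depth_from_phasor(z, mod_freq_hz):
    """Depth a single-path model would report for the measured phasor z."""
    phase = cmath.phase(z) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * mod_freq_hz)


MOD_FREQ_HZ = 30e6                       # assumed modulation frequency
sheet = phasor(1.0, 0.5, MOD_FREQ_HZ)    # weak return from the transparent sheet
wall = phasor(2.5, 1.0, MOD_FREQ_HZ)     # stronger return from the wall behind it

mixed_depth = depth_from_phasor(sheet + wall, MOD_FREQ_HZ)
print(f"sheet alone: {depth_from_phasor(sheet, MOD_FREQ_HZ):.2f} m")
print(f"wall alone:  {depth_from_phasor(wall, MOD_FREQ_HZ):.2f} m")
print(f"mixed pixel: {mixed_depth:.2f} m")
```

In this example the mixed pixel reports a single depth that lies between the sheet and the wall and matches neither surface. Recovering both returns requires more than one phase measurement, which is the role of the coded measurements and sparse inversion described above.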

Where does the name "nanophotography" come from?

The name comes from the light source that is used. In femtophotography a femtosecond pulse of light is sent to the scene. In nanophotography a periodic signal with a period in the nanosecond range is fired at the scene. By using a demodulation scheme, we are able to perform sub-nanosecond time profile imaging.

Does the light visualization work in real-time?

Acquiring a light sweep capture of a large scene takes 4 seconds in total using research prototype hardware. Compare this with a few hours for femtophotography, and 6 hours (including scene calibration) using the UBC method. We expect the acquisition to take a fraction of a second by exploiting two key factors: (i) incremental advances in new generations of hardware technology, and (ii) a tailored ASIC or on-chip FPGA implementation.

How will this technology transfer to the consumer?

A minor modification to commercial technology, such as Microsoft Kinect, is all that is needed. In particular, the FPGA or ASIC circuit needs to be reconfigured to send custom binary sequences to the illumination and lock-in sensor. This needs to be combined with the software to perform the inversion. In general, time of flight cameras are improving in almost every dimension including cost, spatial resolution, time resolution, and form factor. These advances benefit the technique presented in nanophotography.

Why is this not currently used in industry?

Embedding custom codes, as we do, has pros and cons. The major cons include increased computation and ill-posed inverse problems. The former we can expect to be addressed by improvements in computational power and the latter we overcome by using sparse priors. This research prototype might influence a trend away from sinusoidal AMCW, which has been the de facto model in many systems beyond time of flight. More work is needed to make this production quality, but the early outlook is promising.

If the nanocamera costs $500, how do I get one of these right away?

If you want one right away, you will have to build one. All the parts can be bought off the shelf from vendors; no special order is necessary. If you think you have the skillset of a motivated undergraduate student in EE (e.g., FPGA, PCB experience), you can probably build one following the details in the technical paper. Feel free to contact any of the authors for details.

Media Coverage

- Engadget MIT's $500 Kinect-like camera works in snow, rain, gloom of night

- MIT News Office Inexpensive 'nano-camera' can operate at the speed of light

- New Scientist Camera that sees through fog could make driving safer

- GigaOM MIT camera could help avoid collisions, boost medical imaging

- The Financial Express Now, 'nano-camera' that operates at the speed of light

- Softpedia MIT Motion Sensing Camera Can Handle Translucent Objects

- CNN Money MIT camera could help avoid collisions, boost medical imaging

- Gizmodo MIT's New $500 Kinect-Like Camera Even Works with Translucent Objects.

- Huffington Post 'Speed of Light' Camera can take 3D Pictures for $500

Additional Light Sweep Movies

Empirical validation: by using a scene with calibrated geometry, we can empirically validate the time resolution of our technique.

Images

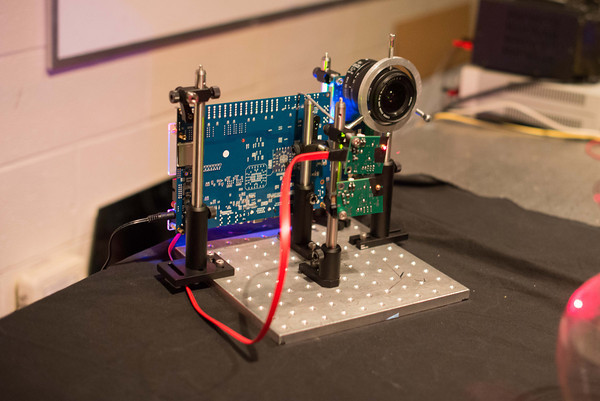

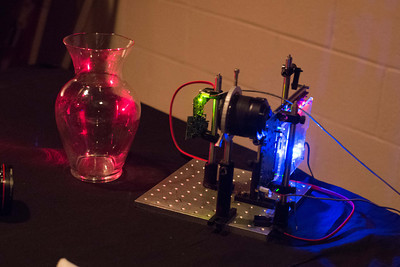

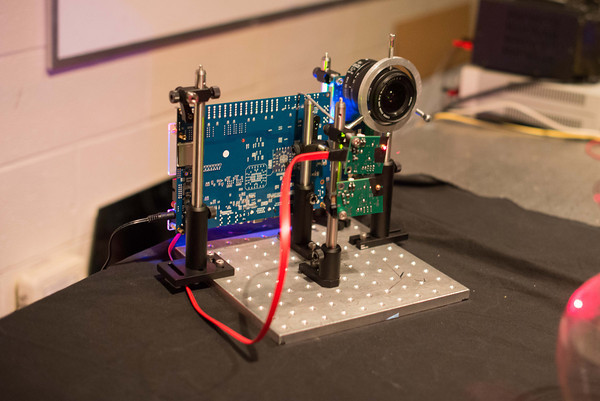

Key components: PMD 19k3 sensor, FPGA Dev Kit (student version), custom PCB for light sources, and DSLR lens from a regular Canon SLR.

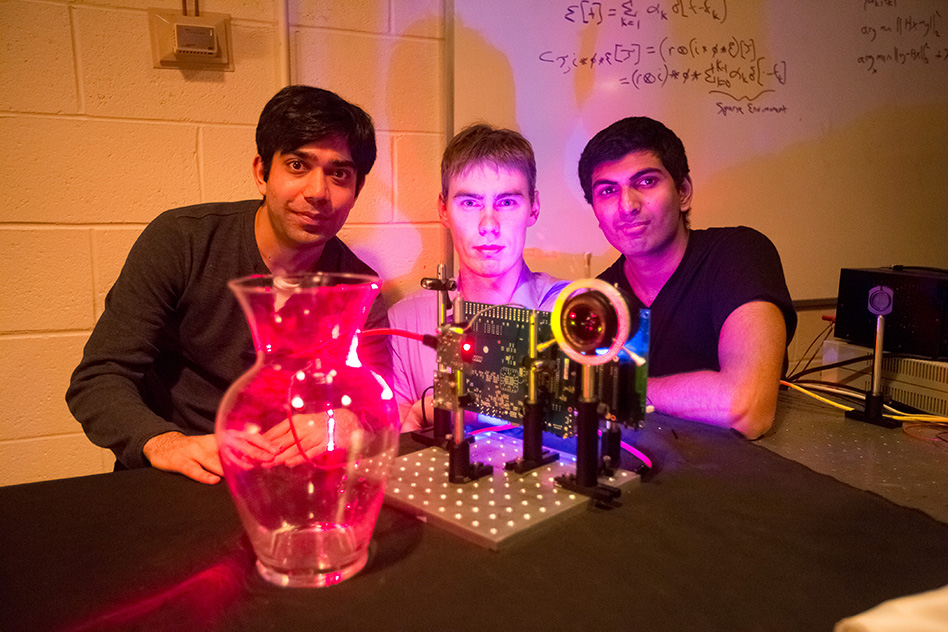

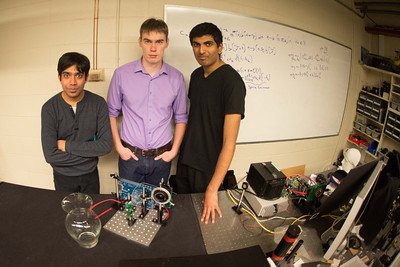

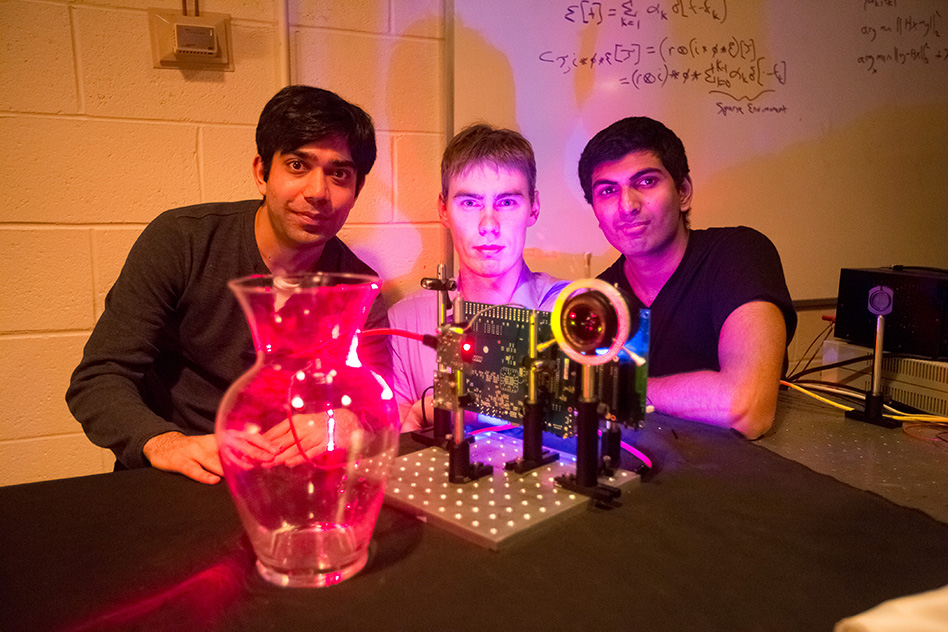

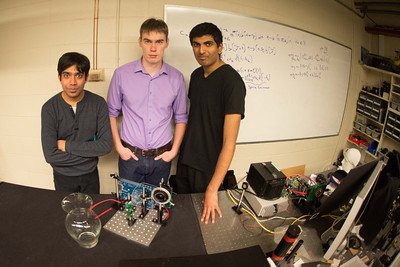

From MIT News: MIT students (left to right) Ayush Bhandari, Refael Whyte (Univ. Waikato, NZ) and Achuta Kadambi pose next to their "nano-camera" that can capture translucent objects, such as a glass vase, in 3-D.

Refael Whyte, Professor Ramesh Raskar, Achuta Kadambi, and Ayush Bhandari hold different translucent objects. Their paper demonstrates ranging through translucent media, such as these sheets.

Previous work on Femtophotography allows additional information to be captured, such as the detailed wavefront of light, but requires a laboratory setup.

A side view of the Camera imaging a vase. 3D models of this vase can now be constructed using time of flight technology.

Dr. Ramesh Raskar, Associate Professor, MIT. Senior author and principal investigator (PI) of this project.

Close up of the nano-camera on the MIT homepage (www.mit.edu).

MIT students Ayush Bhandari, Refael Whyte, and Achuta Kadambi stand with their prototype.