Animatable 3D models of humans find application in a wide range of commercial products ranging from video games to movies. Most existing methods of generating such models require either extremely high-end hardware or fail to generate the model in real-time, limiting the model's usability. The Microsoft Kinect is a relatively cheap and easily available device that is capable of capturing 3D point clouds of a scene at 30Hz. This makes it an ideal choice for applications requiring the end-user to generate 3D models in a cost-effective manner.

Most existing systems using the Kinect are designed for high-end computing hardware that is unavailable to the end user. Given the time-intensive nature of such model generation, complete real-time performance may not be feasible and partial real-time performance that requires limited interaction with the user can be seen as an acceptable compromise. Separation of the data capture and registration processes can be such an alternative that produces dense 3D models of human subjects while requiring hardly any time to be spent by the user. Additionally, such a system can provide real-time feedback to the user, providing information such as the parts of the model that have yet to be captured, capture quality as well as possible holes in the generated model.

To provide rough real-time feedback to the user, which is a must if the system is to be of any practical use, we had to find a way to "roughly" register point clouds captured without using time-intensive gradient descent methods like most point cloud registration algorithms. We decided to turn our attention to vision-based camera trackers and Simultaneous Localization and Mapping (SLAM) systems. One of the best real-time camera trackers for our purpose turned out to be Parallel Tracking and Mapping (PTAM), which was developed by the Active Vision Group at Oxford University for augmented reality applications. PTAM could be used to directly provide a rough solution to the registration problem, and was almost guaranteed to perform at real-time. Not just would this provide us with the feedback we needed, it could also be used as an initial starting point for the gradient descent problem, greatly reducing the number of iterations required to reach an acceptable fit.

The proposed system requires the user to rotate 360o round the subject and provides real-time feedback as he does so. The entire capture process is designed to take about 30 seconds. Registration of the captured point clouds takes place asynchronously in parallel, requiring an additional 10 minutes to complete. This second phase requires no interaction with the user whatsoever and can proceed asynchronously in the background.

While not perfect, the rough fit provided by PTAM is good enough for the user to judge the quality of the model. The user can easily determine which parts of the subject have not yet been captured and if the model contains any holes.

Fine adjustment, though time-consuming, does produce excellent 3D models of the subject. Meshing can now be performed on these models, rendering them ready for use in animation or virtual reality applications.

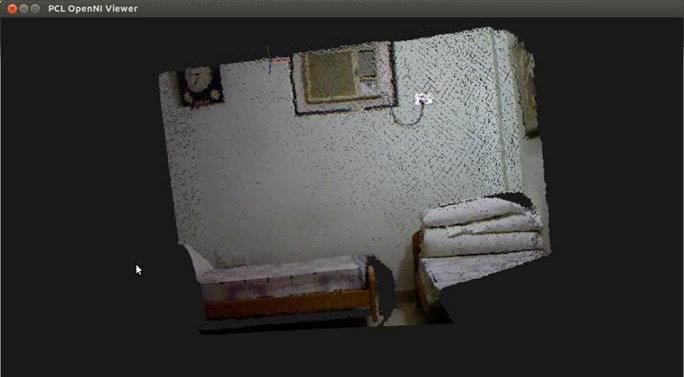

Data was captured from the Kinect using the OpenNI API. The raw data was then reorganized into point clouds, RGB and depth images. The RGB images were directly pushed to PTAM for camera tracking. It was found that the system performed best when one in every twenty point clouds was used for model generation. Increasing the capture frequency did not improve model quality significantly, while requiring processing of a lot more redundant data. Point Cloud Library (PCL) was used for handling point clouds. The system was tested on an Intel Core i7 3610QM laptop CPU with a low-end nVIDIA GT630M GPU running Ubuntu 12.04.

PTAM generates the initial map using five-point stereo. The depths computed by PTAM are scaled, assuming the displacement between the first two keyframes to be 10 cm. This scaling must be done as it is necessary to assume some initial displacement (or baseline of the stereo system). Thus, even if we make use of the available point cloud data, it is not possible to predict occlusion since only scaled depths of feature points are known. Additionally, the depths of all points added to the map after initialization are not known to a good enough accuracy to be of much use in tracking, especially when far away from the initial scene. PTAM was originally developed for "small AR workspaces", in which both of these issues do not normally arise - occlusions are minor and some portion of the initial scene is always visible. In our case, however, every capture will involve major occlusion due to the human subject and, since we rotate 360o round the subject, for a significant portion of the capture process, the initial scene is not going to be visible. These issues greatly limit the usability of PTAM for a rough initial fit, but can easily be fixed if the depth map is fed into PTAM as well, instead of just the RGB image.

The stereo initialization of PTAM, required to create the initial map can be removed altogether, reading depths directly from the depth map. As far as occlusion detection is concerned, PTAM determines tracking quality by computing the following ratio -

Iterative Closest Point (ICP) is a commonly used gradient descent method used for point cloud registration. The ICP variant we use attempts to minimize a point-to-plane error metric. This has been shown to be better in practice for point cloud registration.

Given source and target point clouds, each iteration of ICP first establishes a set of point correspondences between points in the two clouds. This is done by choosing points that would minimize the calculated error, say, for example, points in the target cloud that are closest to points in the source cloud. Every iteration of ICP returns a transformation matrix $T$, such that on transforming the source cloud using $T$ the error metric is minimized. $T$, in this case would be a 4x4 3D rigid body transformation matrix.

The point-to-plane error metric defines the error function as the sum of squared distances between each point in the source cloud and the tangent plane at its corresponding point in the target cloud. Each iteration of ICP thus attempts to reduce this error. Equivalently, if $\mathbf{s_{i}} = (s_{ix}, s_{iy}, s_{iz}, 1)^{T}$, $\mathbf{t_{i}} = (t_{ix}, t_{iy}, t_{iz}, 1)^{T}$ are points in the source and target clouds respectively and $\mathbf{n_{i}} = (n_{ix}, n_{iy}, n_{iz}, 1)^{T}$ is the unit normal vector at $\mathbf{t_{i}}$, then

We are currently working on switching to variants of ICP that are more robust to noise (such as Sparse ICP). By reformulating the ICP optimization problem using sparsity-inducing norms we hope to achieve significant noise tolerance as well as speed improvements.

In the implementation described above, all processes execute exclusively on the CPU, which is extremely inefficient given that point cloud registration is easily scalable to multiple cores. We are currently working on shifting inherently parallel routines such as ICP computation and meshing to the GPU.

Since the model is generated through pairwise registration of consecutive point clouds, on rotating 360o we sometimes face issues with closing of the loop, so to say. We are looking into global registration techniques to enforce loop closure before meshing.

We currently require about 10 minutes to provide the final model to the user. While this is still quite impressive on low-end systems, it can certainly be improved (GPU acceleration, sparse ICP etc.). Once we fully exploit these speed improvements we look to publish this work