Garment Personalization via Identity Transfer

Roy Shilkrot¹, Daniel Cohen-Or², Ariel Shamir³, Ligang Liu⁴

¹MIT Media Laboratory, ²Tel-Aviv University, ³The Interdisciplinary Center, Herzliya, ⁴University of Science and Technology of China, Hefei

Abstract

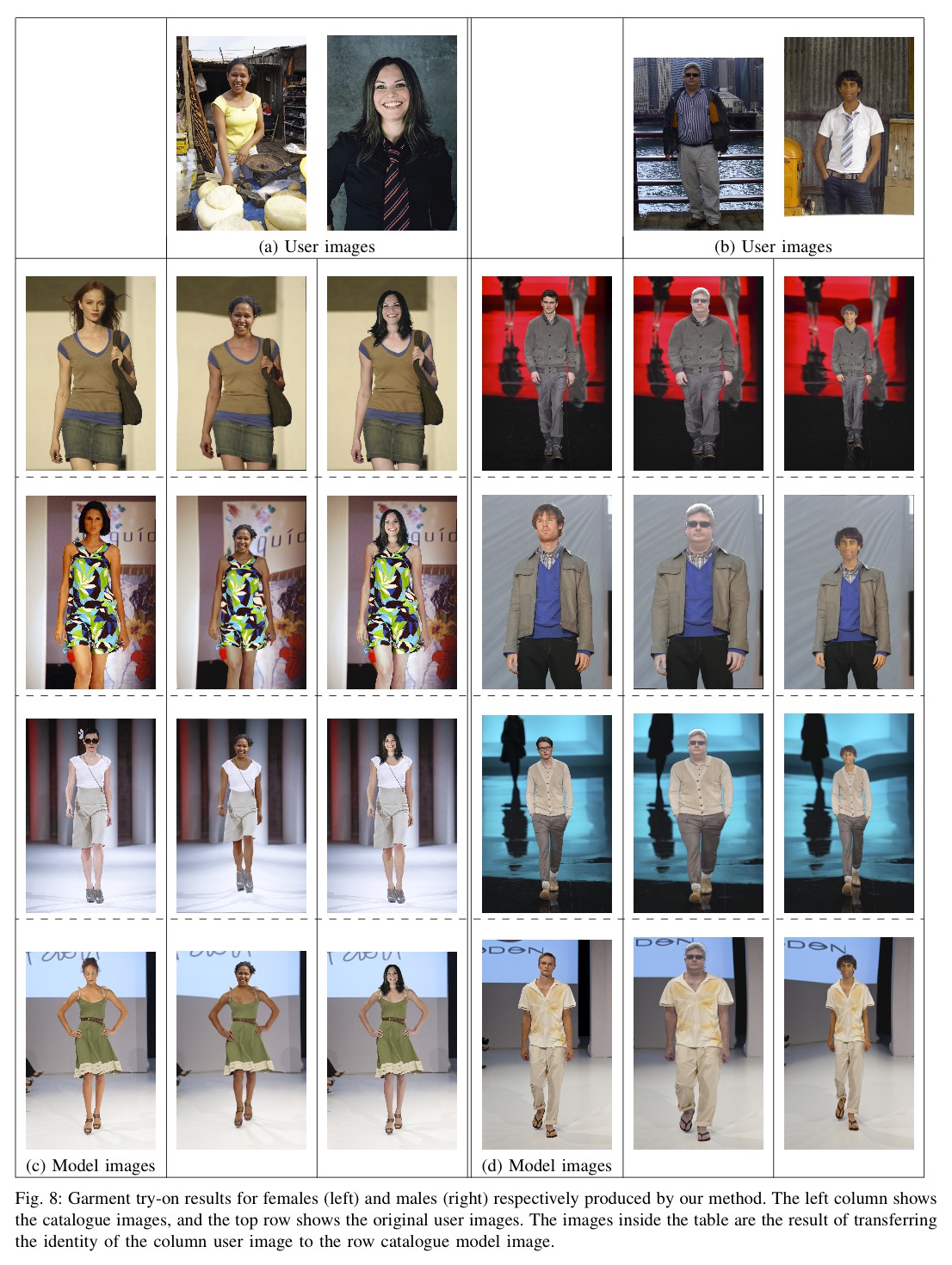

We present a method for transferring the identity of a given subject to a target image, giving the user a virtual try-on experience for clothes. The method clones the user's identity into a catalogue of images of models wearing the desired garments.

We present an accurate segmentation procedure for human heads that separates three semantic parts: face, hair, and background. We use a tri-kernel statistical model based on Textons and segment using graph cut.

Using a simple offline training phase, the extracted head can be cloned automatically into photos of catalogue models.

The skin color is adjusted according to a statistical model, and the head is relit using Spherical Harmonics. Lastly, the body is warped to fit the user's dimensions using a parametric model.
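To make the relighting step more concrete, here is a minimal sketch of ratio-based relighting with the first nine real Spherical Harmonics basis functions. It is not the method used in this work. It assumes that a unit surface normal is known for each head pixel and that the source and target lighting are each given as nine coefficients that already describe shading rather than raw radiance. The function names `sh_basis`, `shade`, and `relight_pixel` are hypothetical.

```python
def sh_basis(n):
    """Return the 9 real spherical-harmonic basis values (bands 0-2) for a unit normal n."""
    x, y, z = n
    return [
        0.282095,                        # band 0
        0.488603 * y,                    # band 1
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,                # band 2
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]


def shade(n, coeffs):
    """Shading at normal n for 9 lighting coefficients (assumed to encode shading)."""
    return sum(c * b for c, b in zip(coeffs, sh_basis(n)))


def relight_pixel(value, n, source_coeffs, target_coeffs, eps=1e-6):
    """Scale one intensity in [0, 1] by the ratio of target to source shading."""
    ratio = shade(n, target_coeffs) / max(shade(n, source_coeffs), eps)
    # Clamp so that strong target lighting cannot push the pixel out of range.
    return min(max(value * ratio, 0.0), 1.0)
```

In this sketch a pixel keeps its value when the source and target lighting agree, and brightens or darkens in proportion to the shading ratio otherwise. A real pipeline would also need to estimate the normals and both sets of lighting coefficients, which this sketch does not cover.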

This creates high-quality compositions imitating the identity of the user in the desired garment. We show some realistic results and present a study that supports their quality.

Video

Results

Download

Instructions

Please follow these video instructions on how to use the application. The video shows the macOS version; the Windows version is identical.

It works best with a photo of:

- a frontal face

- medium resolution (around 500x500 pixels)

- a face that takes up at least 20% of the image, so that it is large and clear but does not dominate the entire photo

- hair that is clearly visible and not cut off
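The guidelines above can be checked roughly before a photo is submitted. The sketch below is not part of the application. It assumes a face bounding box `(x, y, w, h)` is already available from some face detector, reads "20% of the image" as a fraction of the image area, and treats "around 500x500" as within 50% of 500 pixels per side. The function name `check_photo` and its parameters are hypothetical.

```python
def check_photo(width, height, face_box,
                min_face_fraction=0.20, target_side=500, side_tolerance=0.5):
    """Return a list of warnings for a photo that may not suit the application."""
    warnings = []
    x, y, w, h = face_box

    # "Around 500x500": accept sides within the assumed tolerance of the target.
    lo = target_side * (1 - side_tolerance)
    hi = target_side * (1 + side_tolerance)
    if not (lo <= width <= hi and lo <= height <= hi):
        warnings.append("resolution is far from 500x500")

    # Assumption: the 20% guideline refers to the face's share of the image area.
    fraction = (w * h) / float(width * height)
    if fraction < min_face_fraction:
        warnings.append("face covers less than 20% of the image")

    # A face box that leaves the frame suggests the head or hair is cut off.
    if x < 0 or y < 0 or x + w > width or y + h > height:
        warnings.append("face box extends past the image border")

    return warnings
```

An empty list means the photo passes these rough checks. Whether the face is frontal and whether the hair is fully visible still has to be judged by eye.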

About

This work is a collaboration between the MIT Media Lab, the Tel-Aviv University Computer Graphics Lab, The Interdisciplinary Center, Herzliya, and the University of Science and Technology of China, Hefei.

The images in this work are licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.