High-precision RFID Location Sensing

RFIG Lamps: Interacting with a Self-describing World via Photosensing Wireless Tags and Projectors

Presented at Siggraph 2004

Ramesh Raskar, Paul Beardsley, Jeroen van Baar, Yao Wang, Paul Dietz, Johnny Lee, Darren Leigh, Thomas Willwacher

Mitsubishi Electric Research Labs

RFIG: Finding millimeter-precise RFID location using a handheld RF reader and pocket projector without RF collision

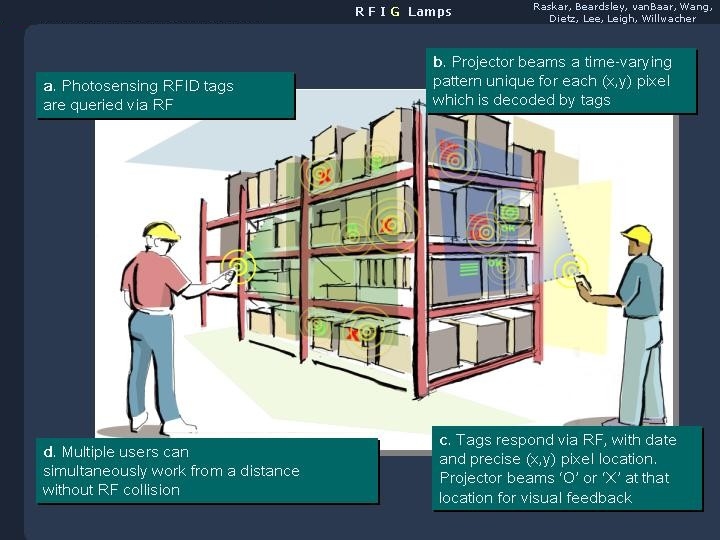

A photosensor is embedded in the RFID tag. Coded illumination from a pocket projector locates the tag.

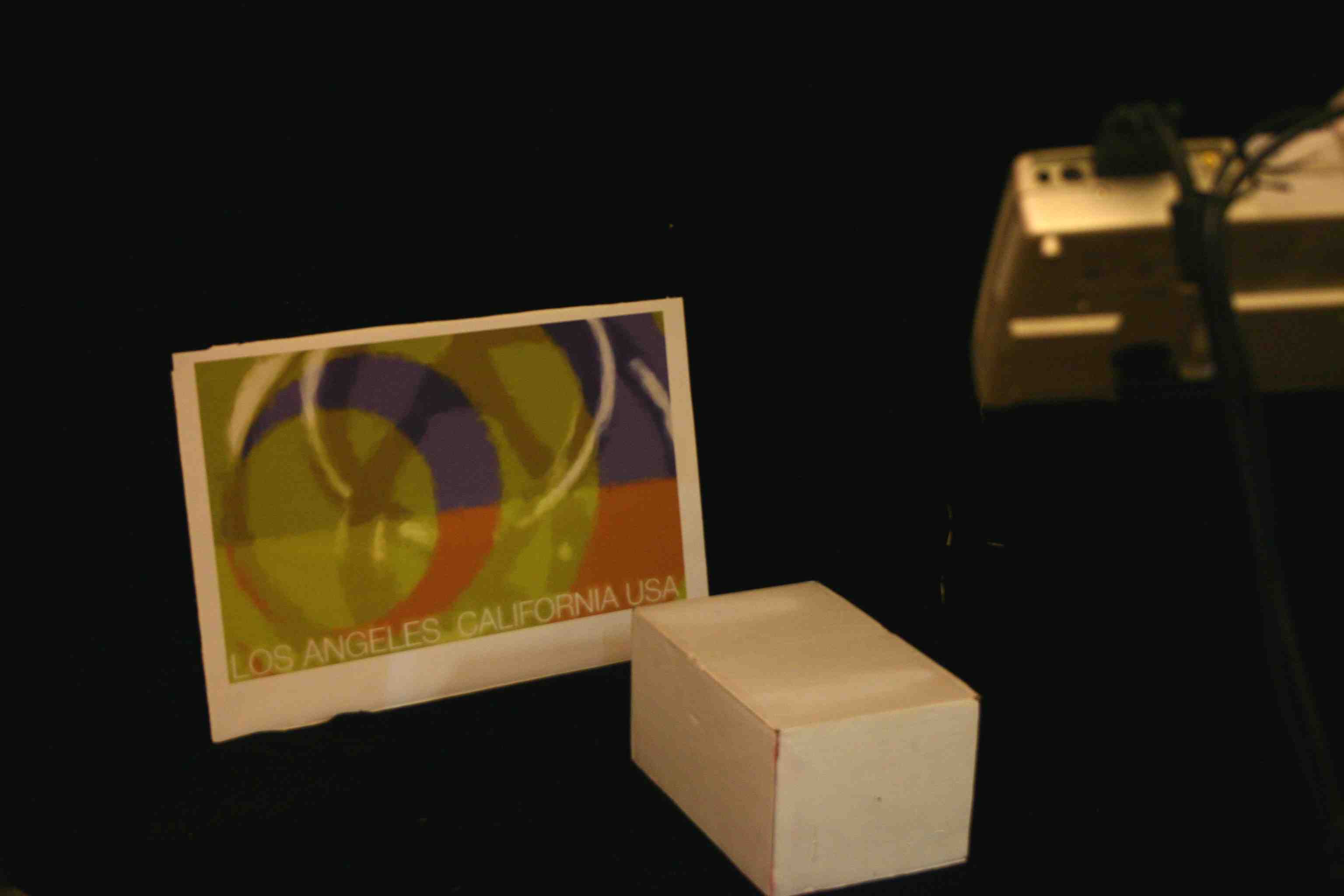

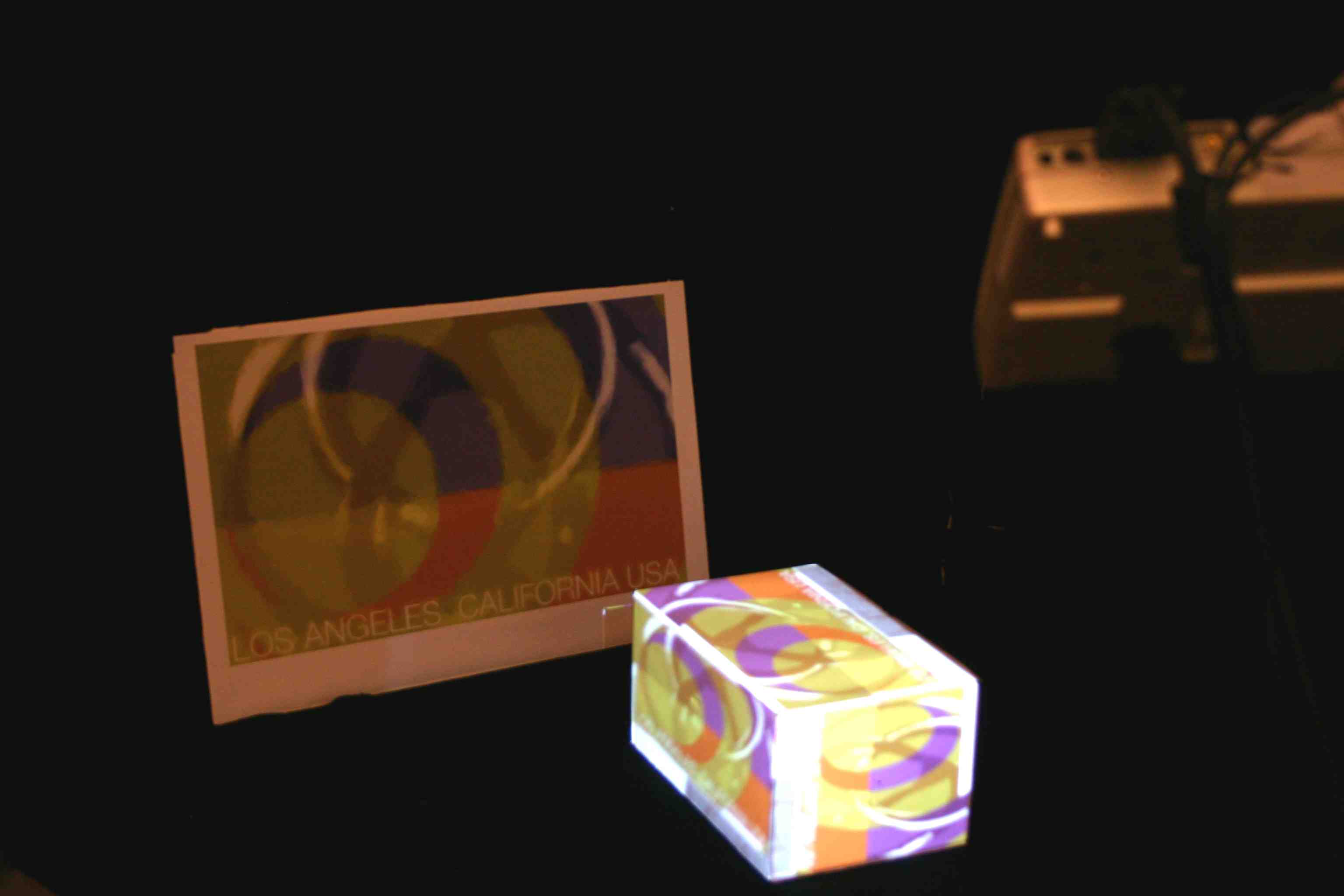

The computer-generated labels are projected onto the object as an overlay, creating augmented reality (AR).

We describe how to instrument the physical world so that objects become self-describing, communicating their identity, geometry, and other information such as history or user annotation. The enabling technology is a wireless tag which acts as a radio frequency identity and geometry (RFIG) transponder. We show how addition of a photo-sensor to a wireless tag significantly extends its functionality to allow geometric operations - such as finding the 3D position of a tag, or detecting change in the shape of a tagged object. Tag data is presented to the user by direct projection using a handheld locale-aware mobile projector. We introduce a novel technique that we call interactive projection to allow a user to interact with projected information e.g. to navigate or update the projected information. The work was motivated by the advent of unpowered passive-RFID, a technology that promises to have significant impact in real-world applications. We discuss how our current prototypes could evolve to passive-RFID in the future.


RFIG = Radio Frequency Identity and Geometry

Geometry includes the notion of Location, Orientation, and Displacement of tags as well as the pose of reader/projector

Related Siggraph 2004 Activities and Publicity

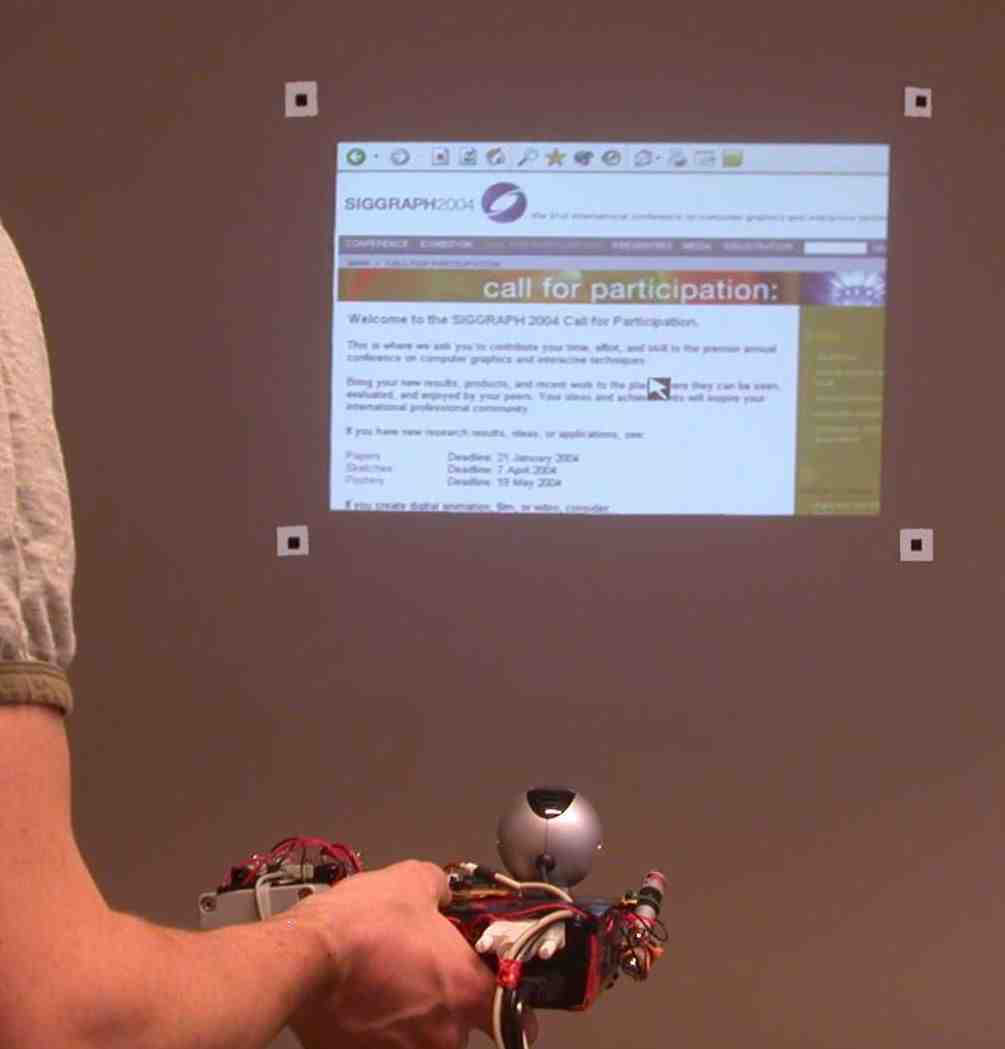

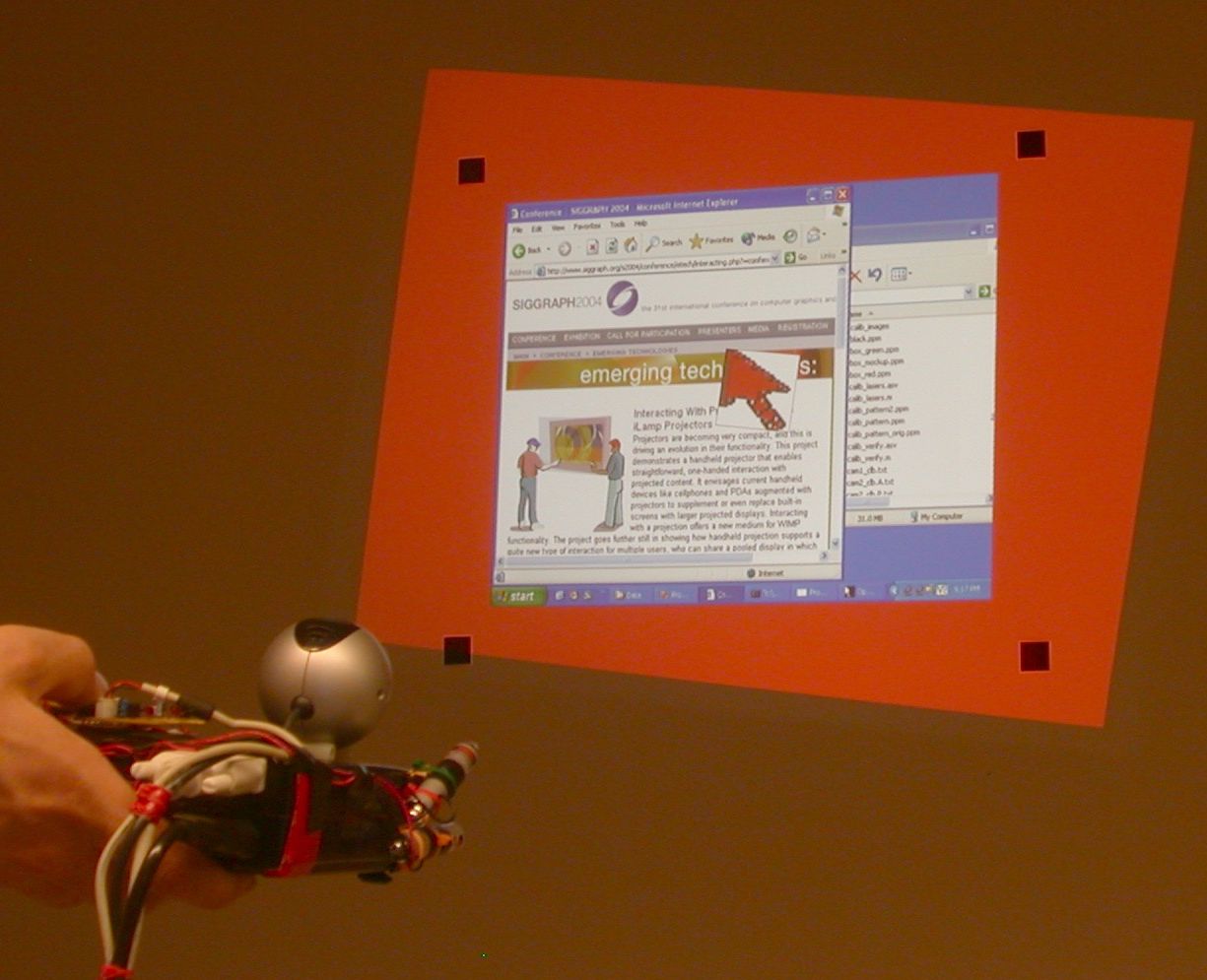

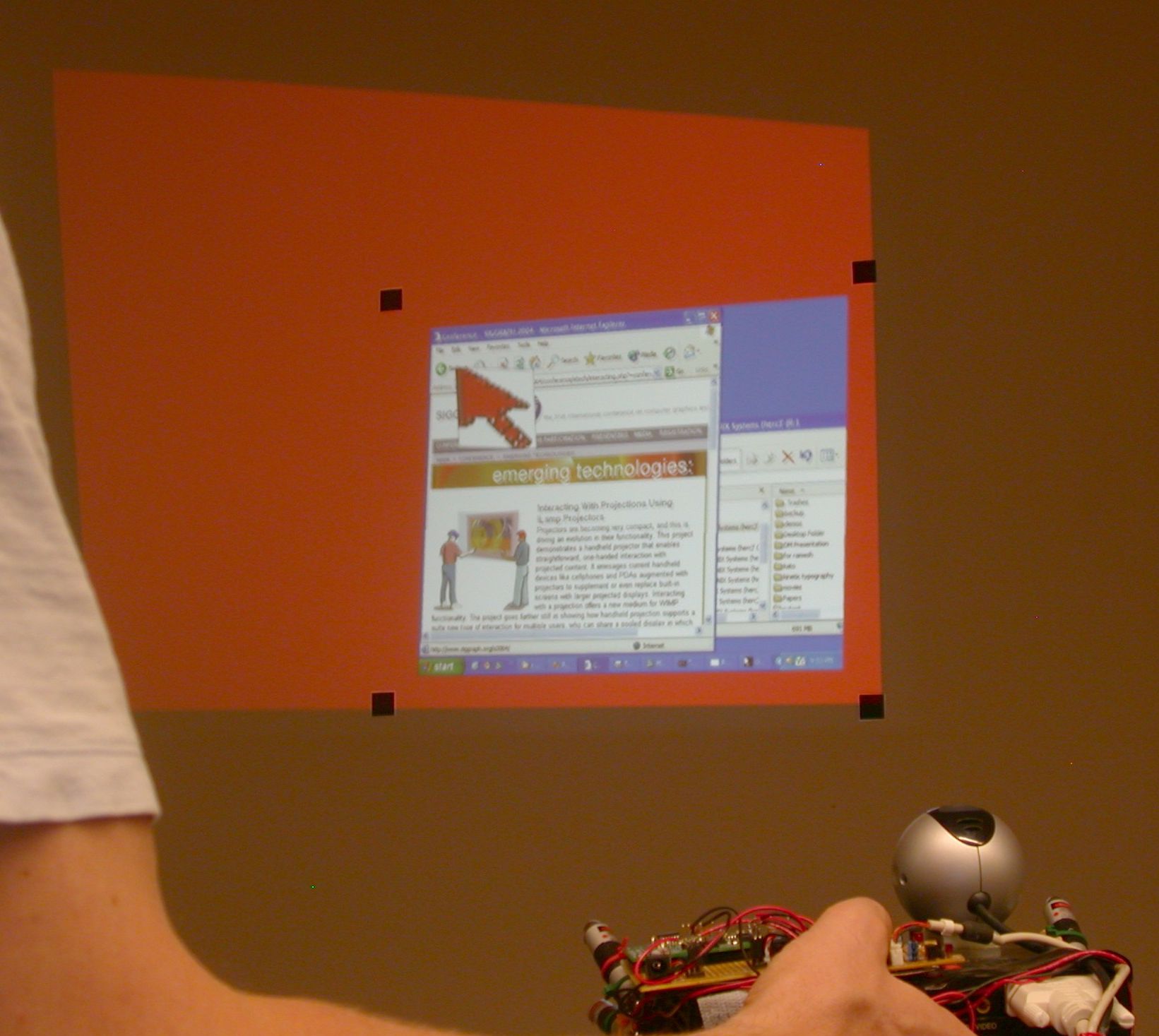

Emerging Technologies Booth showing real-time demonstration of handheld iLamps projectors

Paper Presentation, Tuesday 10:30am

E-tech Presentation, Monday 10:30am

Highlighted Paper

Course on Advanced Projector Geometry topics, Monday 3:45pm

Siggraph 2004 paper PDF Copy | Communications of the ACM, September 2005, PDF Copy

Link to Video

SIGGRAPH 2003 Paper, iLamps: Geometrically Aware and Self-Configuring Projectors [Link]

Projector links [Link]

Projector mailing list [Link]

Mitsubishi Projectors [Link]

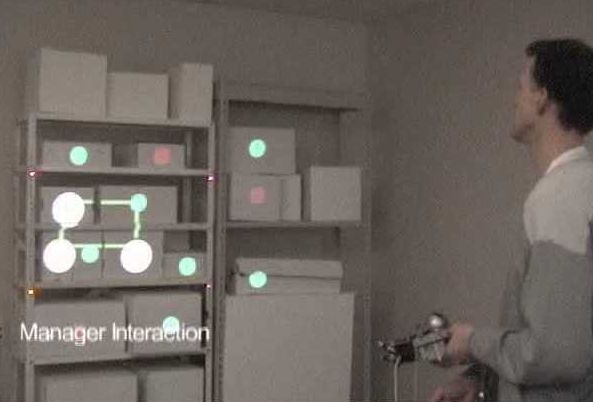

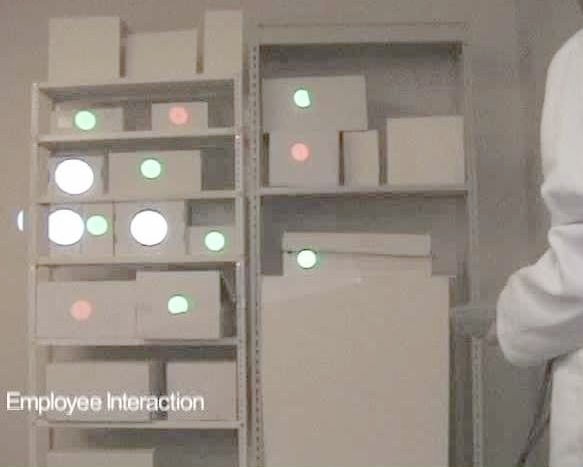

Interacting with RFID via location tracking and augmented reality. The manager locates products that are about to expire and gets visual red/green feedback. The manager leaves virtual annotations (to be stored in an online database). The employee later retrieves those annotations (big red circles indicate, e.g., boxes that should be thrown away) from a completely different viewpoint.

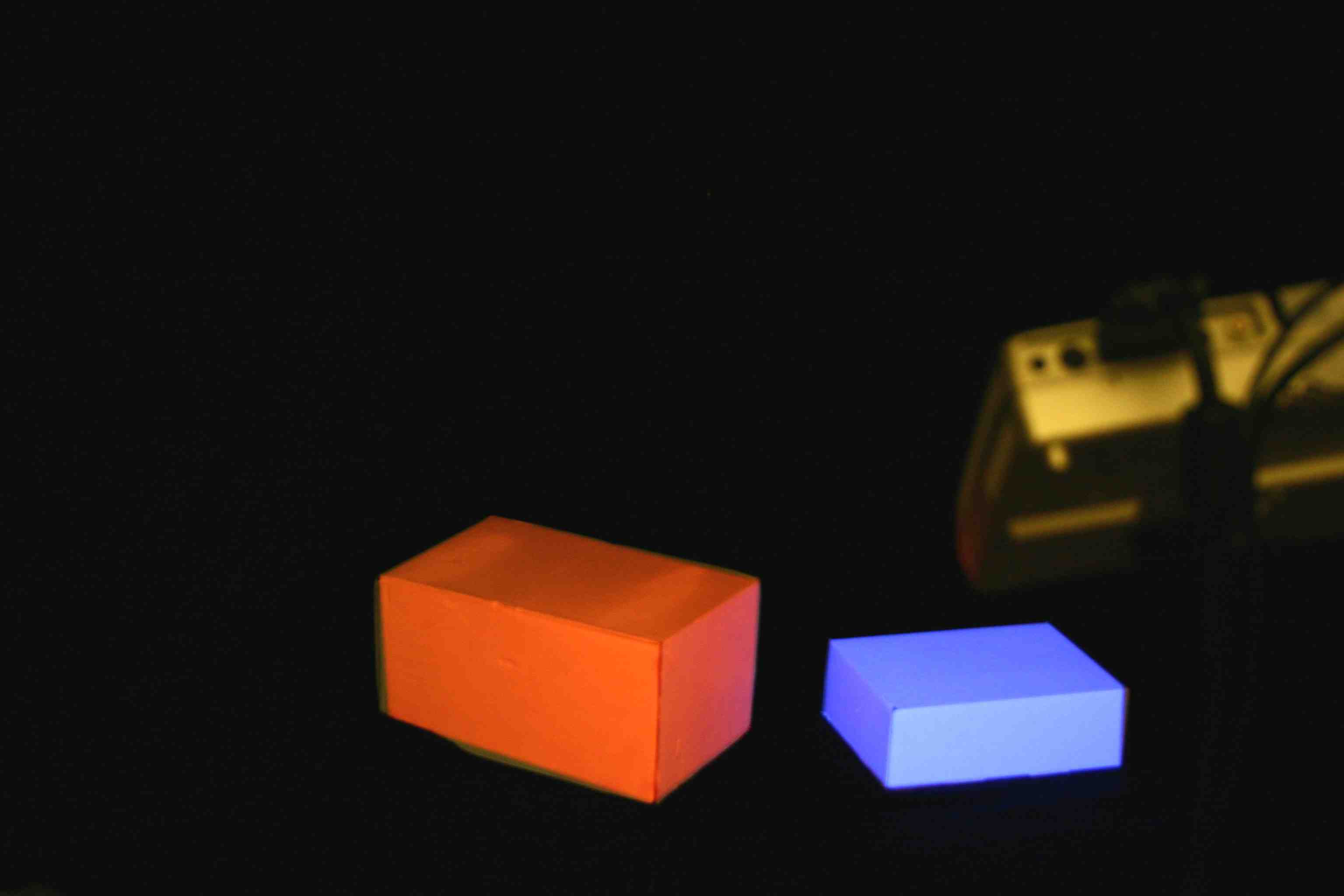

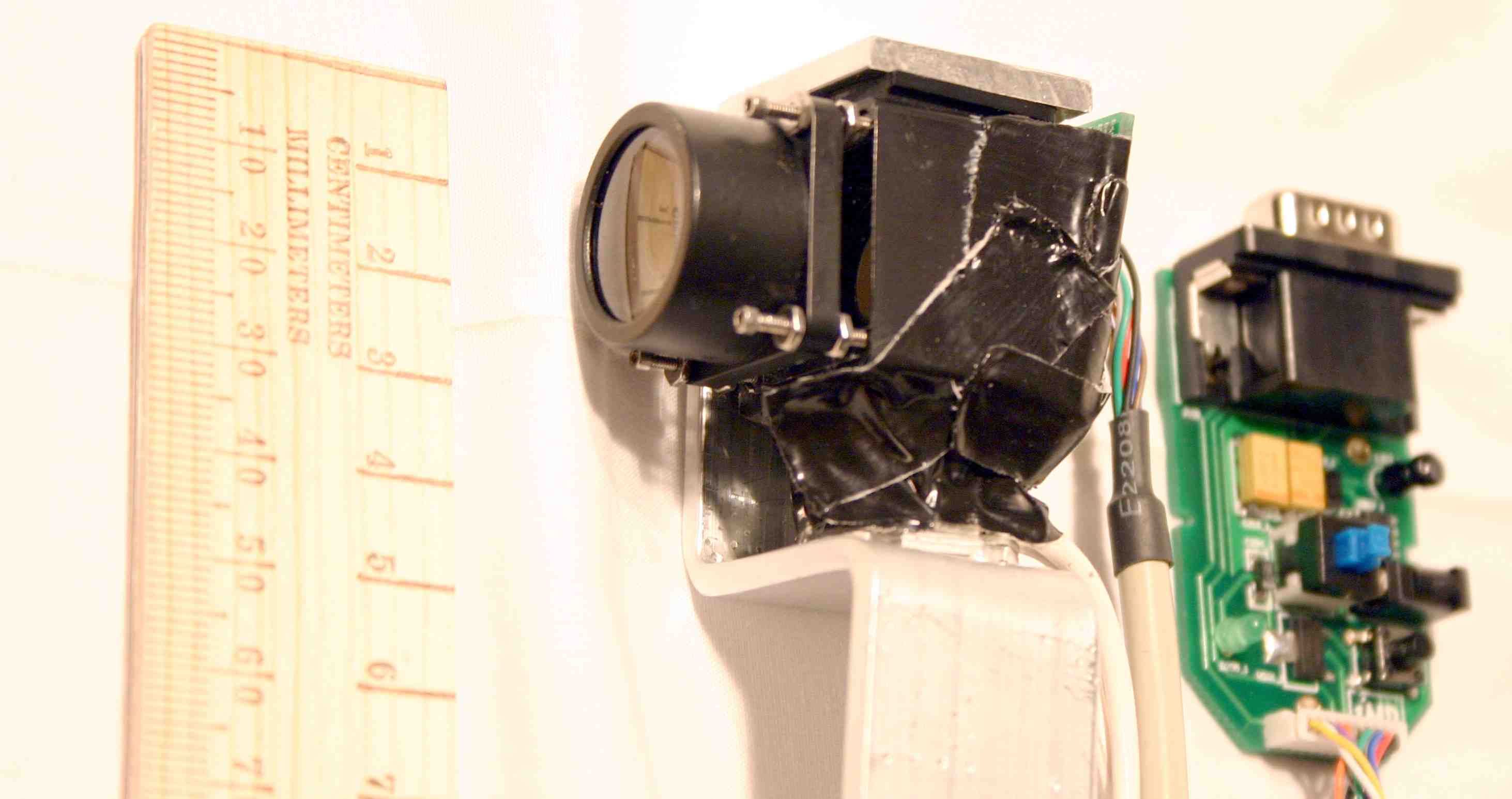

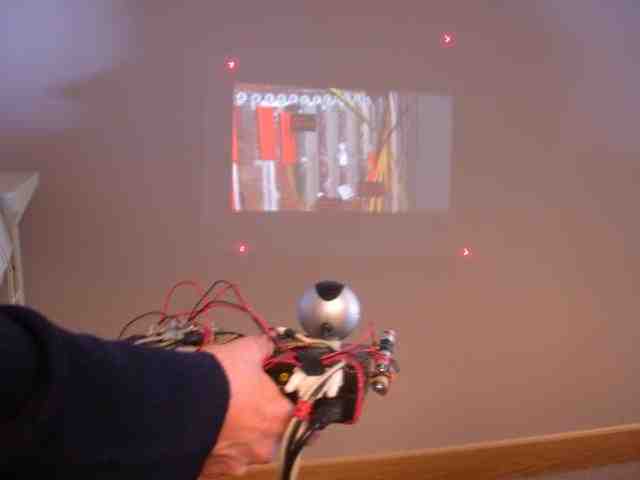

RFID location tracking with millimeter-level accuracy. The projector beams a temporally unique code for each pixel, which is decoded by the photosensor on the tag.

The tags can be battery-less because they detect light but do NOT transmit any light.

Presence of light is binary 1 and absence is binary 0 in the temporal optical code.

The tags respond via RF and transmit the (x,y) coordinate of the pixel that illuminated them. When the projector receives those (x,y) coordinates from the RF reader, it turns on a circle at that (x,y) location. This gives visual feedback. (In this picture, for example, red indicates boxes with products about to expire and green indicates otherwise.)
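The decoding step described above can be illustrated with a minimal sketch. The page does not say which code the projector uses, so this example assumes a plain binary code (the bits of x followed by the bits of y, most significant bit first); Gray codes are a common alternative in structured-light systems. The function names, the threshold value, and the 1024x768 resolution are all hypothetical.

```python
from typing import List, Tuple


def bits_needed(n: int) -> int:
    """Number of bits required to index n distinct values (n >= 1)."""
    return max(1, (n - 1).bit_length())


def pixel_code(x: int, y: int, width: int, height: int) -> List[int]:
    """On/off sequence projected at pixel (x, y): x bits, then y bits, MSB first.

    Assumption: plain binary coding; one projected frame per bit.
    """
    bx, by = bits_needed(width), bits_needed(height)
    x_bits = [(x >> i) & 1 for i in range(bx - 1, -1, -1)]
    y_bits = [(y >> i) & 1 for i in range(by - 1, -1, -1)]
    return x_bits + y_bits


def decode_on_tag(samples: List[float], width: int, height: int,
                  threshold: float = 0.5) -> Tuple[int, int]:
    """Recover (x, y) from one photosensor reading per projected frame.

    Light above the (hypothetical) threshold is read as 1, otherwise 0.
    """
    bx, by = bits_needed(width), bits_needed(height)
    if len(samples) != bx + by:
        raise ValueError("expected one sample per projected frame")
    bits = [1 if s > threshold else 0 for s in samples]
    x = 0
    for b in bits[:bx]:
        x = (x << 1) | b
    y = 0
    for b in bits[bx:]:
        y = (y << 1) | b
    return x, y


# Simulate a tag sitting under pixel (300, 200) of a 1024x768 projector.
samples = [0.9 if b else 0.1 for b in pixel_code(300, 200, 1024, 768)]
print(decode_on_tag(samples, 1024, 768))  # (300, 200)
```

Under these assumptions the number of frames grows only logarithmically with resolution: 20 frames are enough to give every pixel of a 1024x768 image a unique code.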

Detecting change from a different viewpoint

A library scenario: Finding which books are on the shelf within the RF range is easy with a traditional (handheld) RF reader. But how can one find out which books are out of alphabetically sorted order? With a passive photosensing RFID tag attached to each book, we can find the exact location of each book. One can then check for a mismatch between the list of books sorted by title and the list of books sorted by position coordinates. The mismatch can also be shown by projecting arrows back onto the shelf that indicate the correct position. (Green arrows.)

We can also find out if any book is placed upside down. We attach two tags, one at the top and one at the bottom. Books for which the locations of the two tags are reversed are marked as upside down. This is indicated visually with red arrows.
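The two shelf checks can be sketched as follows. This is only an illustration of the comparison described above, not the authors' implementation: the `Book` record, the function names, and the coordinate convention (projector pixel coordinates with y increasing downward, books shelved left to right) are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Book:
    title: str
    top_tag: Tuple[int, int]     # decoded (x, y) of the tag fixed at the top of the spine
    bottom_tag: Tuple[int, int]  # decoded (x, y) of the tag fixed at the bottom


def shelf_x(book: Book) -> float:
    """Horizontal shelf position, taken as the mean x of the two tags."""
    return (book.top_tag[0] + book.bottom_tag[0]) / 2


def out_of_order(books: List[Book]) -> List[str]:
    """Titles found in a shelf slot where the title-sorted list expects another book."""
    by_position = sorted(books, key=shelf_x)
    by_title = sorted(books, key=lambda b: b.title.lower())
    return [found.title
            for found, expected in zip(by_position, by_title)
            if found.title != expected.title]


def upside_down(books: List[Book]) -> List[str]:
    """Titles whose 'top' tag was located below their 'bottom' tag.

    Assumption: y grows downward, so an upright book has top_tag y < bottom_tag y.
    """
    return [b.title for b in books if b.top_tag[1] > b.bottom_tag[1]]


shelf = [
    Book("Algebra", (10, 100), (10, 300)),
    Book("Calculus", (20, 100), (20, 300)),
    Book("Biology", (30, 300), (30, 100)),  # shelved after Calculus and flipped
]
print(out_of_order(shelf))
print(upside_down(shelf))
```

A slot-by-slot comparison flags every slot where the two orderings disagree, so a single misplaced book can flag its neighbors too; a real system would likely report the smallest set of moves instead.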

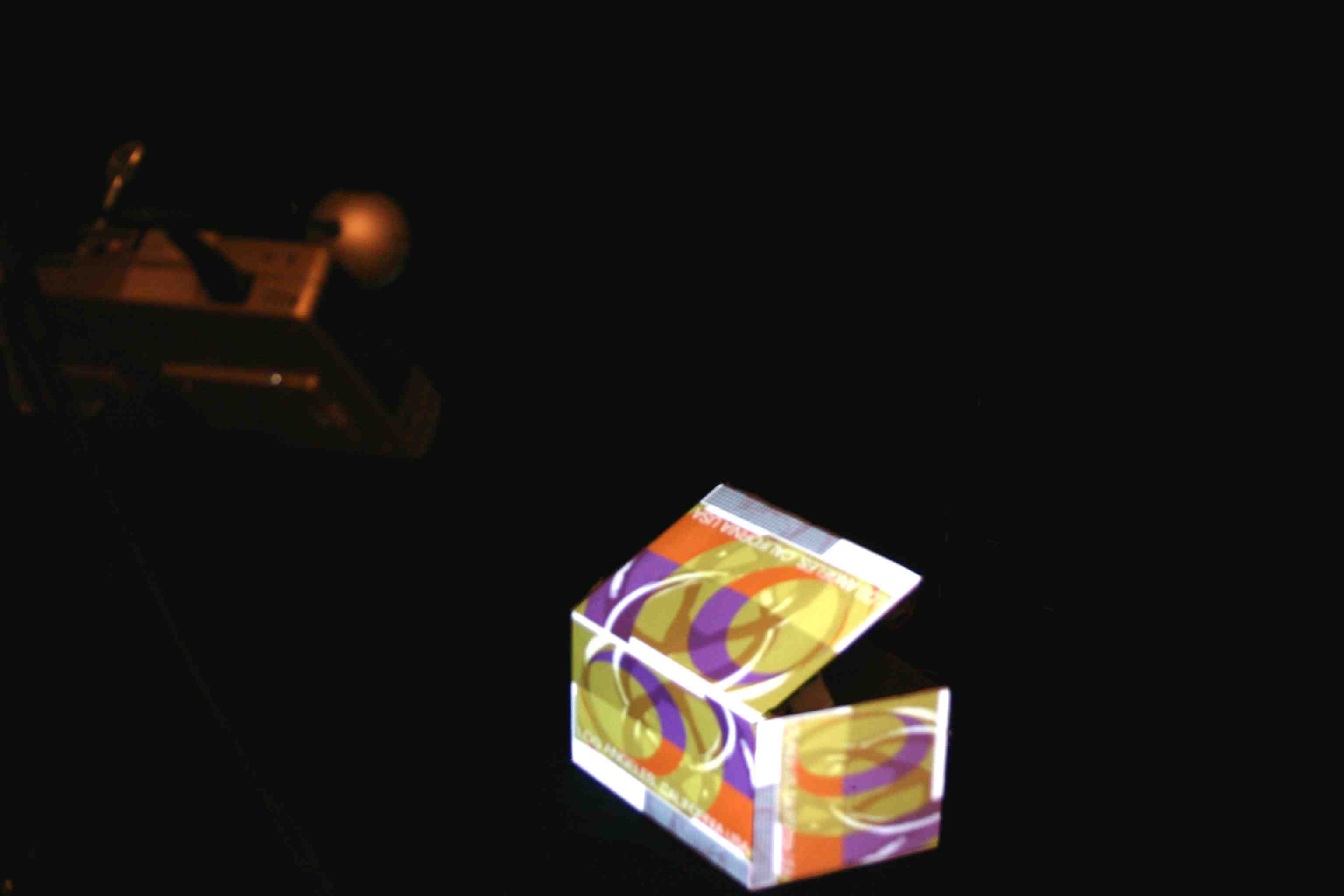

Grouping objects

Texture adaptation and placement on moving objects

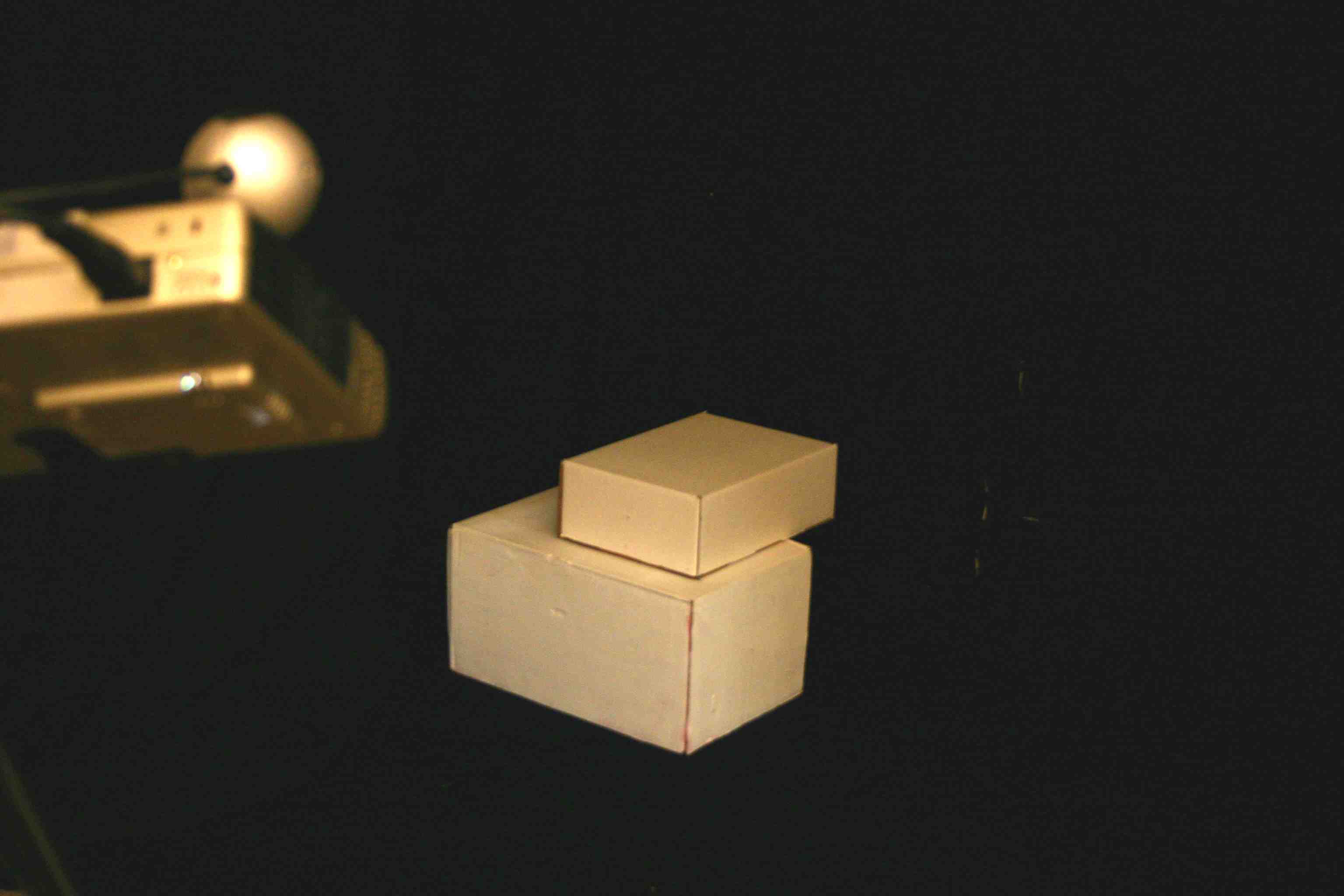

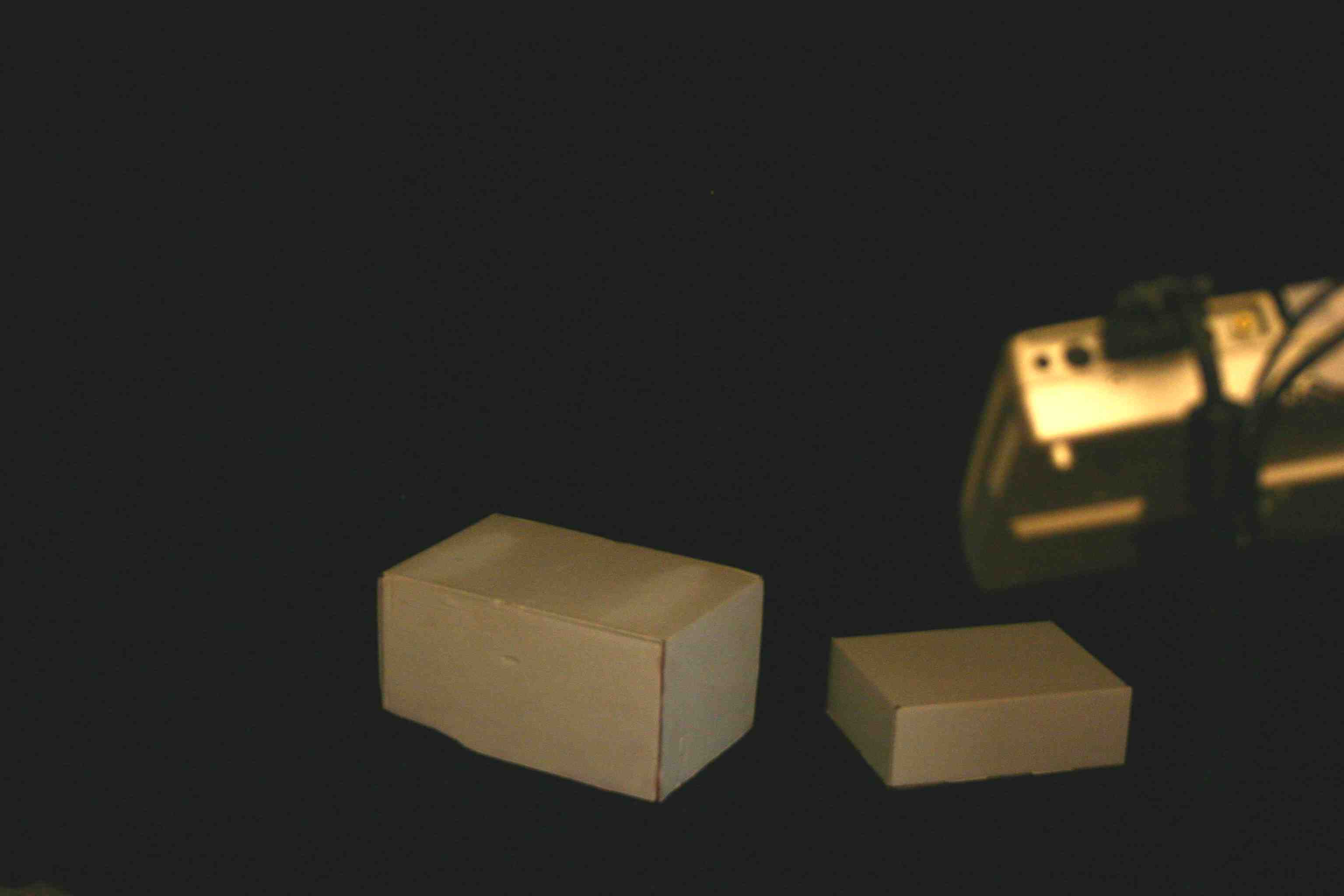

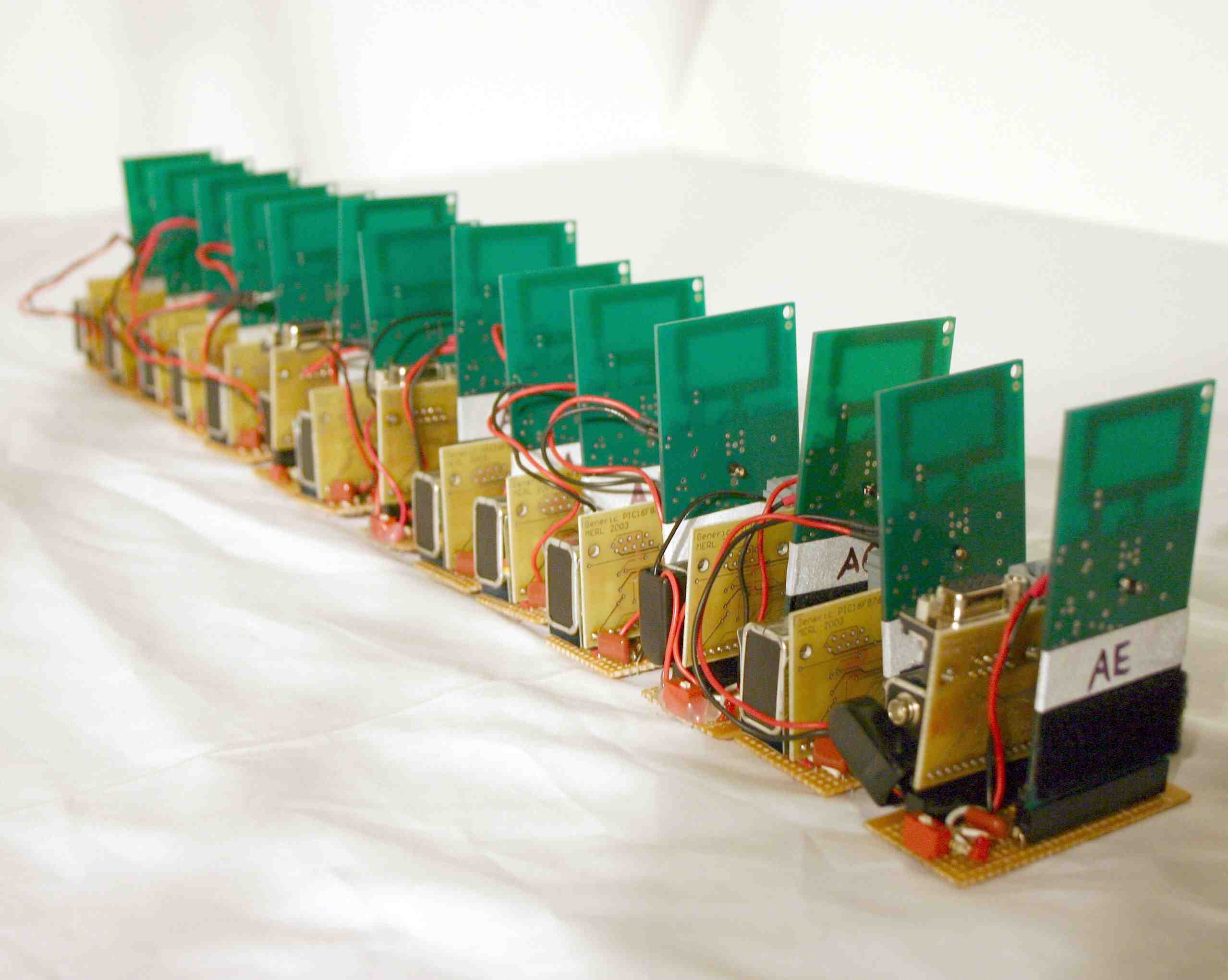

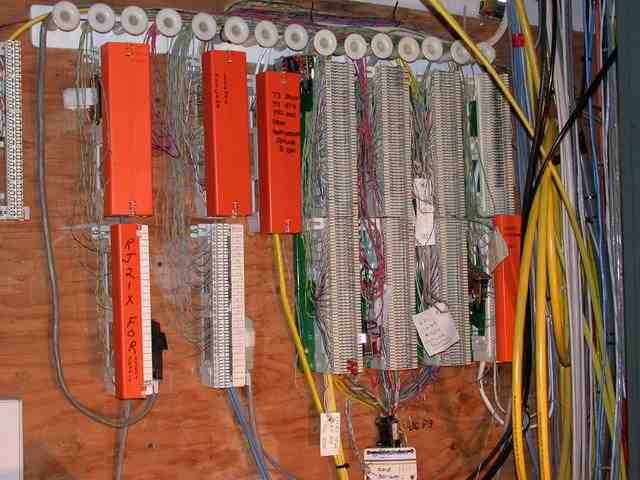

Prototype tags and projector

Applications in Surveillance, Libraries and Factory Robotics

(Left) Detecting an obstruction (such as a person on the tracks near a platform, a disabled vehicle at a railroad intersection, or suspicious material on the tracks). Identifying an obstruction with a camera-based system is difficult, owing to the complex image analysis required under unknown lighting conditions. RFIG tags can be sprinkled along the tracks and illuminated with a fixed or steered beam of temporally modulated light (not necessarily a projector). Tags respond with the status of their reception of the modulated light. Lack of reception indicates an obstruction; a notice can then be sent to a central monitoring facility where a railroad traffic controller observes the scene, perhaps using a pan-tilt-zoom surveillance camera.

(Middle) Books in a library. RF-tagged books make it easy to generate a list of titles within the RF range. However, incomplete location information makes it difficult to determine which books are out of alphabetically sorted order. In addition, inadequate information concerning book orientation makes it difficult to detect whether books are placed upside down. With RFIG and a handheld projector, the librarian can identify a book’s title, as well as its physical location and orientation. Based on a mismatch between the title sort and the location sort, the system provides instant visual feedback and instructions (shown here as red arrows for original positions).

(Right) Laser-guided robot. To guide a robot to pick a certain object from a pile of objects on a moving conveyor belt, the projector locates the RFIG-tagged object and illuminates it with an easily identifiable temporal pattern. A camera attached to the robot arm locks onto this pattern, enabling the robot to home in on the object.
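For the railroad scenario (Left), the decision rule "lack of reception indicates an obstruction" can be sketched in a few lines. The data layout is an assumption made for illustration: `reports` maps each tag ID that answered over RF to whether it received the modulated light, and tags that did not answer at all are kept separate, since a missing RF reply says nothing about the light path.

```python
from typing import Dict, List, Tuple


def classify_tags(expected_ids: List[str],
                  reports: Dict[str, bool]) -> Tuple[List[str], List[str], List[str]]:
    """Split track-side tags into (clear, blocked, silent).

    clear:   tag replied and saw the modulated light
    blocked: tag replied but saw no modulated light (possible obstruction)
    silent:  tag did not reply over RF (hypothetical extra category)
    """
    clear: List[str] = []
    blocked: List[str] = []
    silent: List[str] = []
    for tag_id in expected_ids:
        if tag_id not in reports:
            silent.append(tag_id)
        elif reports[tag_id]:
            clear.append(tag_id)
        else:
            blocked.append(tag_id)
    return clear, blocked, silent


clear, blocked, silent = classify_tags(
    ["track-01", "track-02", "track-03"],
    {"track-01": True, "track-02": False},
)
if blocked:
    print("Notify monitoring facility; light blocked at:", blocked)
```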

Projector-based Interaction

Image stabilization to compensate for handheld motion

The center projector pixel is the mouse pointer while the rest of the image is stabilized

Scenes that are cumbersome to inspect can be 'copied' along with the data stored on tags, then examined in offices by 'pasting' the images after removing geometric and photometric distortions


Media Coverage [Link] [Link] [Link] [Link]

High resolution versions of images above [Link]