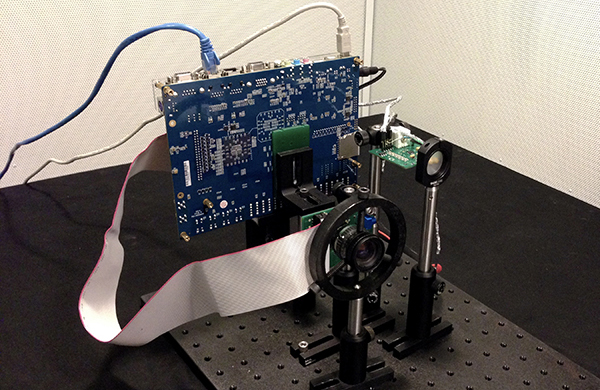

Learn how to assemble off-the-shelf components into an ultrafast time-of-flight camera. We provide step-by-step instructions for building your own 8-billion-frames-per-second camera.

Build your own light-in-flight camera!

This website exists so that you can reproduce MIT's time-of-flight papers. For broader context, please see research from other institutions as well.

Frequently Asked Questions

What is nanophotography?

Nanophotography is a new technique that exploits coded continuous-wave illumination, in which the illumination is strobed with nanosecond periods. The innovation relies on a mathematical technique called "sparse coding" applied to optical paths. The end result: where a conventional time-of-flight camera measures only a single depth at each pixel, the Nanocamera is a multi-depth (or multi-range) camera. Two example applications are light sweep photography, which visualizes the propagation of light, and capturing 3D models of translucent objects.

What's a simple analogy to describe Nanophotography?

Many 3D systems are straightforward. Laser scanners, for instance, ping the scene with a pulse of light and measure how long the pulse takes to return (this is also how police LIDAR scanners work). In nanophotography, we embed a special code into the ping, so that when the light returns we obtain information beyond its time of arrival.
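As a point of reference, the impulse (laser-scanner) measurement reduces to one formula: the pulse travels to the surface and back, so depth is d = c·t/2. The minimal Python sketch below assumes propagation at the vacuum speed of light; the function names are illustrative only.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
# Assumption: we ignore the small slowdown of light in air.
SPEED_OF_LIGHT = 299_792_458.0


def depth_from_round_trip(round_trip_s: float) -> float:
    """One-way distance for a pulse that travels to the surface and back."""
    # The pulse covers the distance twice, so halve the total path length.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_from_depth(depth_m: float) -> float:
    """Round-trip time corresponding to a given depth (or depth difference)."""
    return 2.0 * depth_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    # A 10 ns round trip corresponds to roughly 1.5 m of depth.
    print(f"10 ns round trip -> {depth_from_round_trip(10e-9):.3f} m")
    # Resolving 1 mm of depth requires timing to within a few picoseconds.
    print(f"1 mm depth -> {round_trip_from_depth(1e-3) * 1e12:.1f} ps")
```

The second figure illustrates why directly timing a pulse demands ultrafast electronics.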

What is new about nanophotography?

Nanophotography is, at its heart, a paper that addresses the mixed-pixel problem in time-of-flight range imaging. A mixed pixel occurs when multiple optical paths of light are smeared together at a given pixel. Think of a transparent sheet in front of a wall, which leads to two measured optical paths. We propose a new solution to this problem that jointly designs the algorithm and the hardware. In particular, we customize the codes used in the time-of-flight camera to turn the sparse inverse problem from an ill-conditioned one into a better-conditioned one.
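The mixed-pixel problem is easy to reproduce numerically. A conventional sinusoidal continuous-wave pixel effectively records the sum of the phasors of every path that reaches it, so a sheet and the wall behind it collapse into one phasor, and therefore one depth. This is a minimal sketch assuming a 30 MHz modulation frequency and a hypothetical scene (a semi-transparent sheet at 1.0 m returning half the wall's amplitude, and a wall at 2.0 m); the function names are illustrative, not taken from the paper.

```python
import cmath
import math

# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def amcw_phasor(paths, freq_hz):
    """Sum the returns of every optical path as complex phasors.

    `paths` is a list of (amplitude, depth_m) pairs. A sinusoidal pixel sees
    only this sum, never the individual paths.
    """
    total = 0j
    for amplitude, depth_m in paths:
        # Round-trip phase delay of a sinusoid modulated at freq_hz.
        phase = 4.0 * math.pi * freq_hz * depth_m / SPEED_OF_LIGHT
        total += amplitude * cmath.exp(1j * phase)
    return total


def single_depth_estimate(phasor, freq_hz):
    """Convert one measured phasor into one depth, as a conventional camera does."""
    phase = cmath.phase(phasor) % (2.0 * math.pi)  # wrap into [0, 2*pi)
    return phase * SPEED_OF_LIGHT / (4.0 * math.pi * freq_hz)


if __name__ == "__main__":
    freq = 30e6  # assumed modulation frequency
    # Hypothetical scene: sheet at 1.0 m (amplitude 0.5), wall at 2.0 m (amplitude 1.0).
    paths = [(0.5, 1.0), (1.0, 2.0)]
    estimate = single_depth_estimate(amcw_phasor(paths, freq), freq)
    print(f"reported depth: {estimate:.2f} m")  # lies between the sheet and the wall
```

The reported depth lands between the sheet and the wall and matches neither surface; with a single path, the same functions return the true depth.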

How does continuous-wave (CW) range imaging compare with impulse-based range imaging?

In impulse-based imaging, a packet of photons is fired at the scene and synchronized with a timer: the time of flight is literally measured. In CW range imaging, a periodic signal is strobed throughout the exposure time, and subsequent computation extracts the time of flight. CW offers some advantages: notably, it does not require ultrafast hardware, and it enjoys increased SNR because the signal is transmitted continuously throughout the exposure. The drawbacks of CW include increased computation and multipath interference. We address the latter in the technical paper.
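For the common sinusoidal case, a textbook way to "extract the time of flight" is the four-bucket method: the sensor samples the correlation between the returning light and a reference signal at offsets of 0°, 90°, 180°, and 270°, and the phase follows from an arctangent. This is a minimal sketch of that standard scheme under idealized, noise-free assumptions (30 MHz modulation, hypothetical amplitude and offset values); it is not necessarily what any particular camera's firmware does.

```python
import math

# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def four_bucket_samples(depth_m, freq_hz, amplitude=0.4, offset=1.0):
    """Simulate ideal correlation samples at reference offsets of 0, 90, 180, 270 degrees."""
    phi = 4.0 * math.pi * freq_hz * depth_m / SPEED_OF_LIGHT
    return [offset + amplitude * math.cos(phi - k * math.pi / 2.0) for k in range(4)]


def depth_from_buckets(r, freq_hz):
    """Recover depth, amplitude, and offset from four correlation samples."""
    r0, r1, r2, r3 = r
    # r0 - r2 = 2A cos(phi) and r1 - r3 = 2A sin(phi); the offset (ambient light) cancels.
    phi = math.atan2(r1 - r3, r0 - r2) % (2.0 * math.pi)
    amplitude = math.hypot(r1 - r3, r0 - r2) / 2.0
    offset = sum(r) / 4.0
    depth = phi * SPEED_OF_LIGHT / (4.0 * math.pi * freq_hz)
    return depth, amplitude, offset


if __name__ == "__main__":
    freq = 30e6  # assumed modulation frequency
    depth, amp, off = depth_from_buckets(four_bucket_samples(2.0, freq), freq)
    print(f"depth={depth:.3f} m, amplitude={amp:.2f}, offset={off:.2f}")
    # The phase wraps every c / (2 f), about 5 m at 30 MHz.
    print(f"unambiguous range: {SPEED_OF_LIGHT / (2 * freq):.2f} m")
```

Only four slow exposures and an arctangent are needed, which is why CW avoids ultrafast timing hardware; the price is that the arithmetic assumes a single return per pixel.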

Where does the name "nanophotography" come from?

The name comes from the light source that is used. In femtophotography a femtosecond pulse of light is sent to the scene. In nanophotography a periodic signal with a period in the nanosecond range is fired at the scene. By using a demodulation scheme, we are able to perform sub-nanosecond time-profile imaging.

How will this technology transfer to the consumer?

Only a minor modification to commercial technology, such as the Microsoft Kinect, is needed. In particular, the FPGA or ASIC circuit must be reconfigured to send custom binary sequences to the illumination source and the lock-in sensor. This must be combined with software that performs the inversion. In general, time-of-flight cameras are improving in almost every dimension, including cost, spatial resolution, time resolution, and form factor. These advances benefit the technique presented in nanophotography.
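One way to see why the choice of binary sequence matters: if the same code drives both the light source and the lock-in reference, a simple model says each pixel's measurement is the scene's time profile circularly convolved with the code's autocorrelation. That convolution is a circulant matrix, and its condition number is the ratio of the largest to the smallest magnitude in the kernel's discrete Fourier transform. The sketch below compares a square wave with a length-31 maximal-length sequence (m-sequence). The measurement model, the code length, and the use of an m-sequence are illustrative assumptions, not the specific sequences or hardware pipeline from the paper.

```python
import cmath
import math


def m_sequence():
    """Length-31 maximal-length sequence, mapped from {0, 1} to {+1, -1}.

    Uses the recurrence a[n] = a[n-3] XOR a[n-5], whose characteristic
    polynomial x^5 + x^2 + 1 is primitive, so the sequence has period 31.
    """
    bits = [1, 0, 0, 0, 0]
    while len(bits) < 31:
        bits.append(bits[-3] ^ bits[-5])
    return [1 - 2 * b for b in bits]


def square_wave(length=31):
    """Binary square wave: first half +1, second half -1 (about 50% duty cycle)."""
    high = (length + 1) // 2
    return [1] * high + [-1] * (length - high)


def circular_autocorrelation(code):
    """Periodic autocorrelation: the smearing kernel in this simple model."""
    n = len(code)
    return [sum(code[i] * code[(i + lag) % n] for i in range(n)) for lag in range(n)]


def circulant_condition_number(kernel):
    """Condition number of the circulant matrix whose first column is `kernel`.

    The DFT diagonalizes a circulant matrix, so its singular values are the
    magnitudes of the kernel's DFT coefficients.
    """
    n = len(kernel)
    mags = [
        abs(sum(kernel[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]
    return max(mags) / min(mags)


if __name__ == "__main__":
    for name, code in [("square wave", square_wave()), ("m-sequence", m_sequence())]:
        kappa = circulant_condition_number(circular_autocorrelation(code))
        print(f"{name:12s} condition number: {kappa:8.1f}")
```

The square wave concentrates its energy in a few harmonics, so some frequencies are barely measured and inverting the convolution amplifies noise there; the m-sequence spreads energy almost evenly and gives a condition number roughly an order of magnitude smaller in this toy setting.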

Why is this not currently used in industry?

Embedding custom codes, as we do, has pros and cons. The major cons are increased computation and ill-posed inverse problems. We expect the former to be addressed by improvements in computational power, and we overcome the latter by using sparse priors. This research prototype might influence a trend away from sinusoidal amplitude-modulated continuous-wave (AMCW) imaging, which has been the de facto model in many systems beyond time of flight. More work is needed to make this production quality, but the early outlook is promising.
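To make "sparse priors" concrete: in the mixed-pixel case, most bins of a pixel's time profile are empty, so the inversion can be posed as finding the few returns that explain the measurement. The sketch below uses orthogonal matching pursuit (OMP), a standard greedy sparse solver, as a stand-in; the paper's actual solver, codes, and parameters may differ. The length-31 m-sequence, the circular-convolution measurement model, and the two-return scene (bins 5 and 12, amplitudes 0.6 and 1.0) are all hypothetical choices for illustration.

```python
import math


def m_sequence():
    """Length-31 maximal-length sequence from a[n] = a[n-3] XOR a[n-5], mapped to +/-1."""
    bits = [1, 0, 0, 0, 0]
    while len(bits) < 31:
        bits.append(bits[-3] ^ bits[-5])
    return [1 - 2 * b for b in bits]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def orthogonal_matching_pursuit(columns, y, sparsity):
    """Greedily pick up to `sparsity` columns, refitting all coefficients each step."""
    support, coeffs = [], []
    residual = list(y)
    for _ in range(sparsity):
        # Choose the column most correlated with what is still unexplained.
        scores = [abs(dot(col, residual)) / math.sqrt(dot(col, col)) for col in columns]
        best = max(range(len(columns)), key=scores.__getitem__)
        if best in support:
            break  # the chosen columns already explain the measurement
        support.append(best)
        # Least-squares refit on the current support via the normal equations.
        gram = [[dot(columns[i], columns[j]) for j in support] for i in support]
        coeffs = solve(gram, [dot(columns[i], y) for i in support])
        residual = [
            y[t] - sum(c * columns[i][t] for c, i in zip(coeffs, support))
            for t in range(len(y))
        ]
    return dict(zip(support, coeffs))


def two_path_demo(bins=(5, 12), amplitudes=(0.6, 1.0)):
    """Simulate one mixed pixel (hypothetical sheet + wall) and recover both returns."""
    code = m_sequence()
    n = len(code)
    # Assumed smearing kernel: the code's circular autocorrelation.
    kernel = [sum(code[i] * code[(i + lag) % n] for i in range(n)) for lag in range(n)]
    # Column t is the kernel shifted to time bin t, i.e. a circulant matrix.
    columns = [[kernel[(tau - t) % n] for tau in range(n)] for t in range(n)]
    # Sparse time profile: only the listed bins carry light.
    profile = [0.0] * n
    for b, a in zip(bins, amplitudes):
        profile[b] = a
    measurement = [sum(profile[t] * columns[t][tau] for t in range(n)) for tau in range(n)]
    return orthogonal_matching_pursuit(columns, measurement, sparsity=len(bins))


if __name__ == "__main__":
    for bin_index, amplitude in sorted(two_path_demo().items()):
        print(f"return in bin {bin_index}: amplitude {amplitude:.3f}")
```

Because only two bins are allowed to be nonzero, the solver reports the sheet and the wall as separate returns with amplitudes 0.600 and 1.000, instead of the single blended depth a sinusoidal pixel would give. Real measurements add noise, so a practical solver would also need a stopping rule based on the residual.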