Demultiplexing Illumination via Low Cost Sensing and Nanosecond Coding

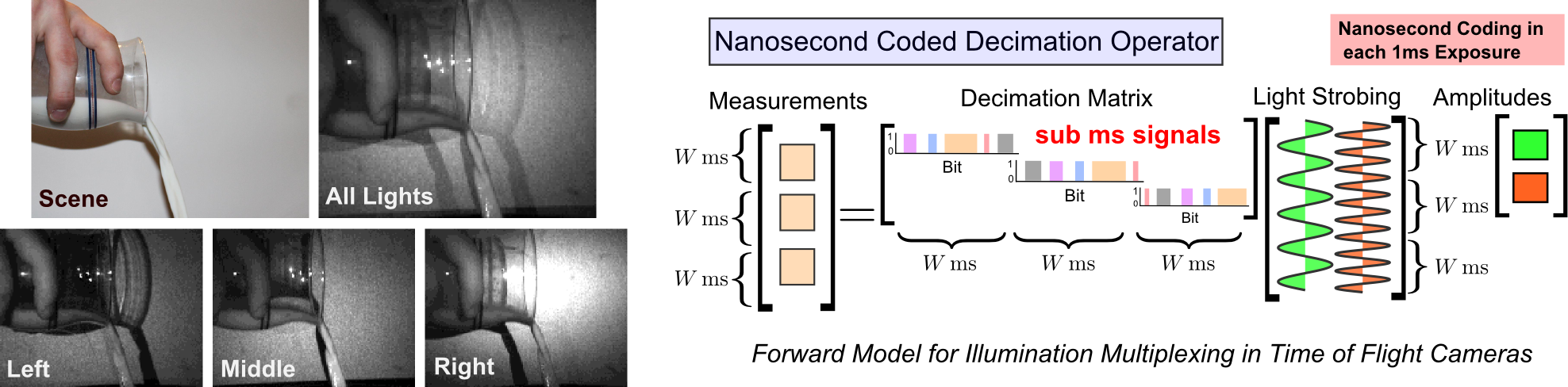

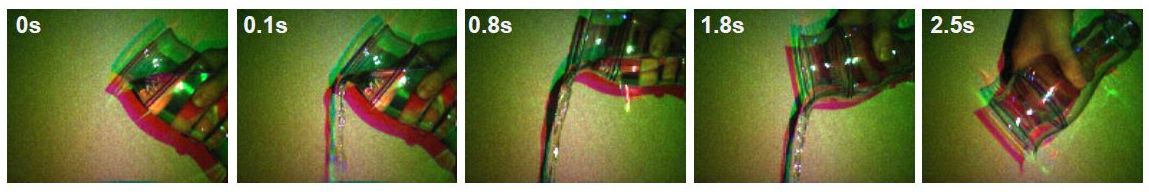

Figure 1: Demultiplexing Illumination with a Time of Flight Camera. The scene is shown at upper left. A ToF camera is synced to 3 light sources; we measure all three light sources together (upper right) and can decompose the measurement as if only one of the individual light sources were on. Note the distinct shadows in the separated images.


Technical Paper

Demultiplexing Illumination via Low Cost Sensing and Nanosecond Coding, IEEE ICCP 2014 [PDF]


Abstract

Several computer vision algorithms require a sequence of photographs taken in different illumination conditions, which has spurred development in the area of illumination multiplexing. Various techniques for optimizing the multiplexing process already exist, but are geared toward regular or high speed cameras. Such cameras are fast, but code on the order of milliseconds. In this paper we propose a fusion of two popular contexts, time of flight range cameras and illumination multiplexing. Time of flight cameras are a low cost, consumer-oriented technology capable of acquiring range maps at 30 frames per second. Such cameras have a natural connection to conventional illumination multiplexing strategies as both paradigms rely on the capture of multiple shots and synchronized illumination. While previous work on illumination multiplexing has exploited coding at millisecond intervals, we repurpose sensors that are ordinarily used in time of flight imaging to demultiplex via nanosecond coding strategies.

Files

Citation

Coming Soon

Applications

Single-chip RGBD Sensor

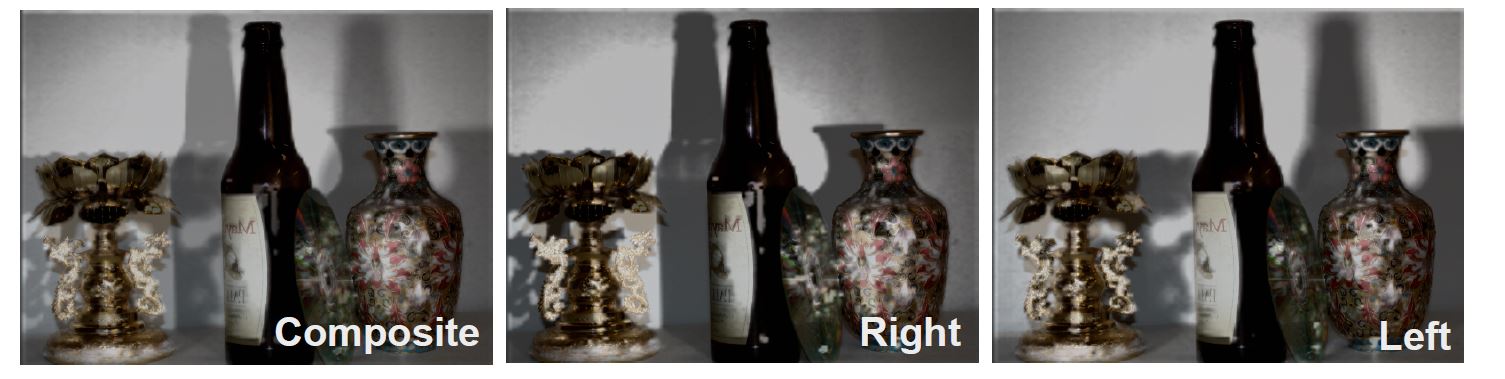

Figure 2: A color amplitude image from the Time of Flight camera. The problem is the same as in Figure 1, except that we multiplex red, green, and blue lights. This allows for single-chip color ToF that does not sacrifice spatial resolution.

Usually, obtaining both color and depth images requires a separate camera sensor. By multiplexing colored light sources, we demonstrate a single-chip RGBD sensor that does not trade spatial resolution for "color pixels" and still works in real time.

Scene Relighting

Figure 3: Relighting a scene post-capture. At left, in the composite image, two lights are on at the same time, placed at the left and right of the scene. We can demultiplex to obtain only the right light or the left light. Note the shadows.

What distinguishes a good photo from a bad one? If you ask LYTRO or light field theorists, they might say it is whether the subject is properly in focus. But many photographers, especially those who work in outdoor environments, might argue that the character is in the lighting. Where LYTRO proposes "shoot now, focus later", we consider "shoot now, relight later". With 3D ToF sensors already being embedded into mobile platforms, our proposed technique may gain some measure of acceptance.
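As a rough illustration of "relight later": because light adds linearly, once the per-light images have been separated, a new lighting condition can be approximated as a weighted sum of them. The sketch below assumes tiny made-up images stored as nested lists; the function name `relight` and the weights are hypothetical and are not taken from the paper.

```python
def relight(per_light_images, weights):
    """Blend demultiplexed images (one per light) into a single relit image.

    per_light_images: list of images, each a list of rows of pixel values.
    weights: one brightness scale per light (0 turns that light off).
    """
    if len(per_light_images) != len(weights):
        raise ValueError("need exactly one weight per light")
    rows = len(per_light_images[0])
    cols = len(per_light_images[0][0])
    return [
        [
            sum(w * img[r][c] for img, w in zip(per_light_images, weights))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

# Made-up 2x2 images of the same scene lit only from the left or only from the right.
left_only = [[10, 0], [5, 5]]
right_only = [[0, 20], [5, 15]]

# Keep the left light at full strength and dim the right light to half.
relit = relight([left_only, right_only], [1.0, 0.5])
```

Setting a weight to zero reproduces the "only the left light" or "only the right light" views described in Figure 3.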

Acknowledgments

We thank Moshe Ben-Ezra and Boxin Shi for proofreading the manuscript and offering suggestions. We thank Hisham Bedri and Nikhil Naik for helpful discussions. Achuta Kadambi was supported by a Draper Laboratory Fellowship.

Contact

For technical details contact:

Achuta Kadambi:

achoo@mit.edu

Frequently Asked Questions (FAQ)

What is the main contribution of this project?

Many computer vision algorithms require photographs of a scene under different light sources (e.g. photometric stereo). There are ways to optimize the collection process: this is the multiplexed illumination problem in computer vision.

However, existing work is tailored toward conventional cameras. We derive a multiplexing model for Time of Flight cameras, which are increasingly popular 3D cameras that form the basis for the new Microsoft Kinect.

What exactly is illumination multiplexing?

Suppose we have 3 lights, A, B, and C, illuminating a scene. If we take a photograph, we measure a combination of the three lights (this is the forward model). The inverse problem is to recover images as if only a single light were on. This is not a new problem and has been studied by Schechner's group at Technion (among others).
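The forward and inverse problems can be sketched for a single pixel as a small linear system. This is a minimal sketch of conventional multiplexing, not the nanosecond ToF model derived in the paper; the code matrix `CODES`, the brightness values, and the function names are assumptions chosen for illustration.

```python
from fractions import Fraction

# Hypothetical multiplexing codes: each row is one shot, each column one light
# (A, B, C). A 1 means the light is on during that shot.
CODES = [
    [1, 1, 0],
    [1, 0, 1],
    [0, 1, 1],
]

def multiplex(codes, lights):
    """Forward model: each shot records the sum of the lights that are on."""
    return [sum(w * x for w, x in zip(row, lights)) for row in codes]

def demultiplex(codes, shots):
    """Inverse problem: solve codes @ lights = shots by Gauss-Jordan elimination."""
    n = len(codes)
    # Augmented matrix [codes | shots], kept exact with Fraction.
    aug = [[Fraction(v) for v in row] + [Fraction(s)] for row, s in zip(codes, shots)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if aug[pivot][col] == 0:
            raise ValueError("codes are not invertible")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [float(aug[i][n] / aug[i][i]) for i in range(n)]

# One pixel's brightness under lights A, B, and C individually (made-up values).
true_lights = [10, 40, 25]
shots = multiplex(CODES, true_lights)   # what the camera records: [50, 35, 65]
recovered = demultiplex(CODES, shots)   # per-light brightness: [10.0, 40.0, 25.0]
```

Applying the same solve at every pixel yields one image per light. Turning on two lights per shot rather than one is a common motivation for multiplexing, since each shot collects more light.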

Why is illumination multiplexing tough for Time of Flight Cameras?

Time of Flight cameras measure range by frequency locking to a strobed illumination source and measuring the phase shift of the modulation signal. Such cameras are designed to lock on to one light source at the modulation frequency, so separating several light sources that are on at the same time requires additional coding.
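To make the phase measurement concrete, here is a minimal sketch of the textbook four-sample phase estimate used by many continuous-wave ToF cameras. It is not confirmed to be the scheme used by the prototype in this project; the 30 MHz modulation frequency, the cosine signal model, and the function names are all assumptions.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def simulate_samples(depth_m, freq_hz=30e6, amplitude=1.0, offset=2.0):
    """Simulate four correlation samples taken at 0, 90, 180, and 270 degrees.

    Assumed model: sample_k = offset + amplitude * cos(phase - k * pi / 2),
    where phase is the round-trip phase shift of the modulation signal.
    """
    phase = 4.0 * math.pi * freq_hz * depth_m / C
    return [offset + amplitude * math.cos(phase - k * math.pi / 2.0) for k in range(4)]

def depth_from_samples(samples, freq_hz=30e6):
    """Recover depth from the four samples via the phase shift."""
    c0, c1, c2, c3 = samples
    # Differences cancel the constant offset and leave sin(phase) and cos(phase).
    phase = math.atan2(c1 - c3, c0 - c2) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * freq_hz)

# Round trip for a target 2 m away (inside the ~5 m unambiguous range at 30 MHz).
estimated_depth = depth_from_samples(simulate_samples(2.0))
```

The estimate assumes a single modulated source. With several sources contributing to the same samples, this simple formula no longer isolates any one of them, which is the gap the multiplexing model addresses.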

What are some consumer applications of this work?

We validate our technique by constructing a prototype camera. One application is to multiplex colored light sources to create the only 3D color time of flight camera.

Does this work in real-time?

Yes. The results are demonstrated in real time. See the paper for details.

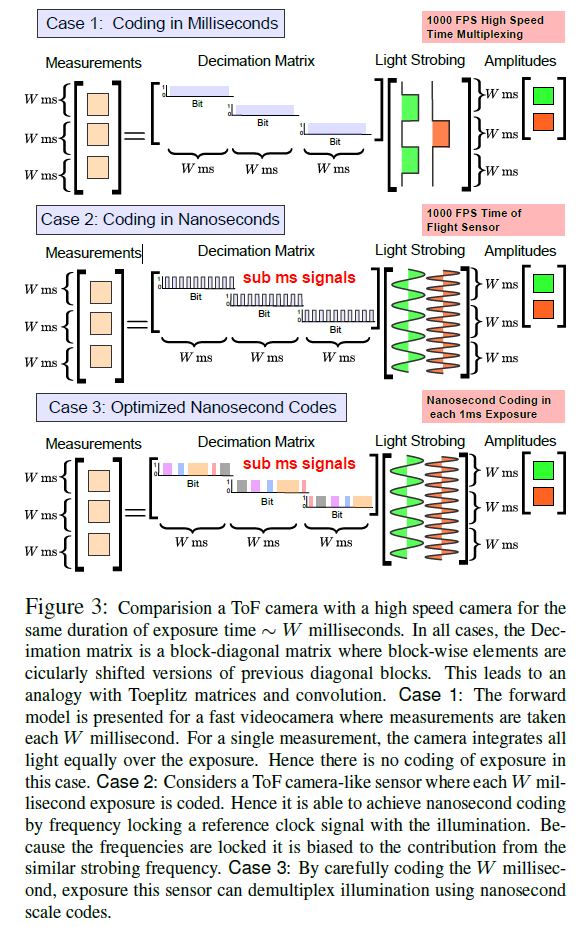

Appendix

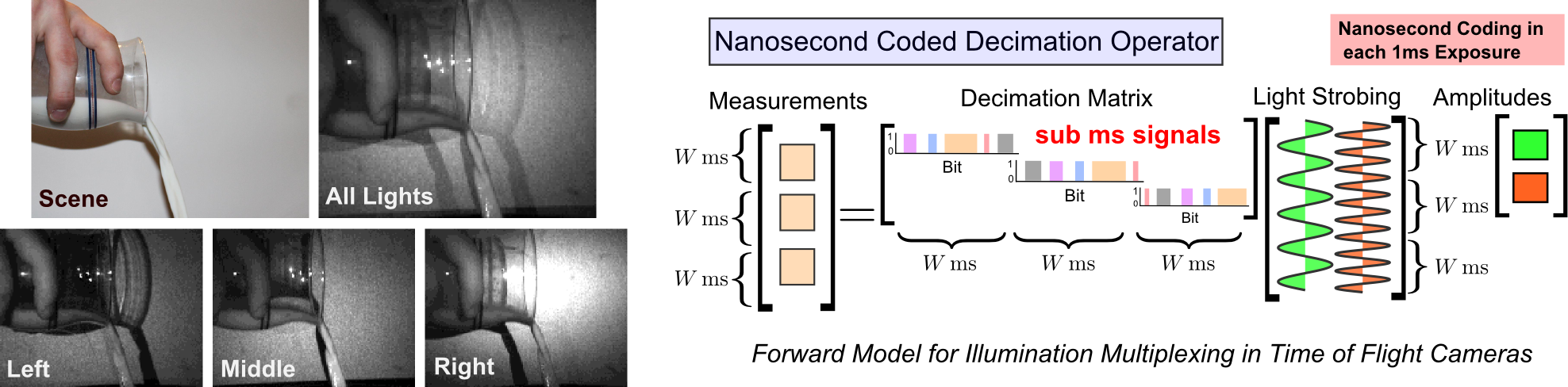

Multiplexing on time of flight sensors is a nanosecond-coded problem. This figure shows some visual math that compares nanosecond and millisecond multiplexing. Note that the former exploits coding within the decimation operator.

The required physical hardware is very similar to the one described in http://nanophoto.info and http://media.mit.edu/~achoo/nanophotography.