A long-standing dream of artificial intelligence has been to put common sense

knowledge into computers-enabling machines to reason about everyday life. Some

projects, such as Cyc, have begun to amass large collections of such knowledge.

However, it is widely assumed that the use of common sense in interactive applications

will remain impractical for years, until these collections can be considered

sufficiently complete and common sense reasoning sufficiently robust. Recently,

at the MIT Media Lab, we have had some success in applying common sense knowledge

in a number of intelligent Interface Agents, despite the admittedly spotty coverage

and unreliable inference of today's common sense knowledge systems. This paper

will survey several of these applications and reflect on interface design principles

that enable successful use of common sense knowledge.

Henry Lieberman, Hugo Liu, Push Singh and Barbara Barry

Submitted to AI Magazine, 2004 (draft)

See a video of Henry's lecture on Applying Common Sense Reasoning in Interactive Applications (one hour, requires Realplayer)

See our Web pages giving an overview of the Media Lab's effort in Common Sense Computing!

Also, see the site for my course, "Common Sense Reasoning for Interactive Applications", particularly the student projects.

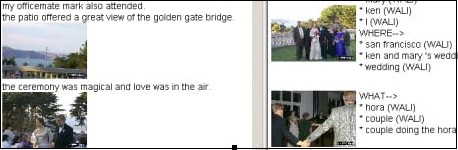

In a hypermedia authoring task, an author often wants to set up meaningful connections between different media, such as text and photographs. To facilitate this task, it is helpful to have a software agent dynamically adapt the presentation of a media database to the user's authoring activities, and look for opportunities for annotation and retrieval. However, potential connections are often missed because of differences in vocabulary or semantic connections that are "obvious" to people but that might not be explicit. ARIA (Annotation and Retrieval Integration Agent) is a software agent that acts an assistant to a user writing e-mail or Web pages. As the user types a story, it does continuous retrieval and ranking on a photo database. It can use descriptions in the story to semi-automatically annotate pictures. To improve the associations beyond simple keyword matching, we use natural language parsing techniques to extract important roles played by text, such as "who, what, where, when". Since many of the photos depict common everyday situations such as weddings or recitals, we use a common sense knowledge base, Open Mind, to fill in semantic gaps that might otherwise prevent successful associations.

Hugo Liu and Henry Lieberman,

Robust Photo Retrieval Using World Semantics

Proceedings of the 3rd International Conference on Language Resources And

Evaluation Workshop: Using Semantics for Information Retrieval and Filtering

(LREC2002) -- Canary Islands, Spain

Photos annotated with textual keywords can be thought of as resembling documents,

and querying for photos by keywords is akin to the information retrieval done

by search engines. A common approach to making IR more robust involves query

expansion using a thesaurus or other lexical resource. The chief limitation

is that keyword expansions tend to operate on a word level, and expanded keywords

are generally lexically motivated rather than conceptually motivated. In our

photo domain, we propose a mechanism for robust retrieval by expanding the concepts

depicted in the photos, thus going beyond lexical-based expansion. Because photos

often depict places, situations and events in everyday life, concepts depicted

in photos such as place, event, and activity can be expanded based on our "common

sense" notions of how concepts relate to each other in the real world.

For example, given the concept "surfer" and our common sense knowledge

that surfers can be found at the beach, we might provide the additional concepts:

"beach", "waves", "ocean", and "surfboard".

This paper presents a mechanism for robust photo retrieval by expanding annotations

using a world semantic resource. The resource is automatically constructed from

a large-scale freely available corpus of commonsense knowledge. We discuss the

challenges of building a semantic resource from a noisy corpus and applying

the resource appropriately to the task.

Hugo Liu, Henry Lieberman, and Ted Selker

A Model of Textual Affect Sensing using Real-World Knowledge

ACM Conference on Intelligent User Interfaces, January 2003, Miami, pp. 125-132.

Recipient of Outstanding Paper Award

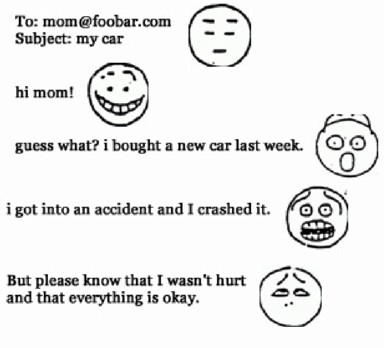

This paper presents a novel way for assessing the affective qualities of natural language and a scenario for its use. Previous approaches to textual affect sensing have employed keyword spotting, lexical affinity, statistical methods, and hand-crafted models. This paper demonstrates a new approach, using large-scale real-world knowledge about the inherent affective nature of everyday situations (such as “getting into a car accident”) to classify sentences into “basic” emotion categories. This commonsense approach has new robustness implications. Open Mind Commonsense was used as a real world corpus of 400,000 facts about the everyday world. Four linguistic models are combined for robustness as a society of commonsense-based affect recognition. These models cooperate and compete to classify the affect of text.

Hugo Liu, Ted Selker, Henry Lieberman

Visualizing the Affective Structure of a Text Document.

Conference on Human Factors in Computing Systems (CHI'03), Ft. Lauderdale, Florida,

April 2003.

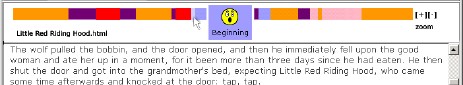

This paper introduces an approach for graphically visualizing the affective structure of a text document. A document is first affectively analyzed using a unique textual affect sensing engine, which leverages commonsense knowledge to classify text more reliably and comprehensively than can be achieved with keyword spotting methods alone. Using this engine, sentences are annotated using six basic Ekman emotions. Colors used to represent each of these emotions are sequenced into a color bar, which represents the progression of affect through a text document. Smoothing techniques allow the user to vary the granularity of the affective structure being displayed on the color bar. The bar is hyperlinked in a way such that it can be used to easily navigate the document. A user evaluation demonstrates that the proposed method for visualizing and navigating a document’s affective structure facilitates a user’s within-document information foraging activity.